Ma Yi, Shen Xiangyang, and Cao Ying's latest AI overview paper is a hit! Three months in the making; netizens: a must-read

Author: Quantum  Time: 2022.07.13

By Baiyou, from Quantum | Public account QBITAI

After much anticipation, Professor Ma Yi's AI overview paper has finally been released!

It took more than three months and was written in collaboration with neuroscientist Cao Ying and computer science heavyweight Shen Xiangyang.

In Ma Yi's own words, the paper organically combines his "work of the past five years with more than 70 years of the development of intelligence". He also said:

I have never spent so much energy and time on a single article.

Specifically, it "lays out the basic outline and framework of the origin of intelligence and its computational principles".

Many netizens had been looking forward to it ever since it was previewed on social networks.

Sure enough, shortly after its release today, some scholars said they happened to be designing a new generative model and that the paper had given them inspiration.

Let's see what kind of paper this is.

Two principles: simplicity and self-consistency

Over the past decade, progress in artificial intelligence has depended mainly on training homogeneous black-box models, whose decision-making processes and feature representations are largely hard to interpret.

This end-to-end brute-force training not only drives continuous growth in model size, training data, and computing cost, but also brings many problems in practice:

Learned representations lack richness; training lacks stability; models lack adaptability and are prone to catastrophic forgetting...

Against this background, the researchers hypothesize that one root cause of these practical problems is the lack of a systematic and comprehensive understanding of the functional and organizational principles of intelligent systems.

They also ask whether a unified approach lies behind all this and could explain it.

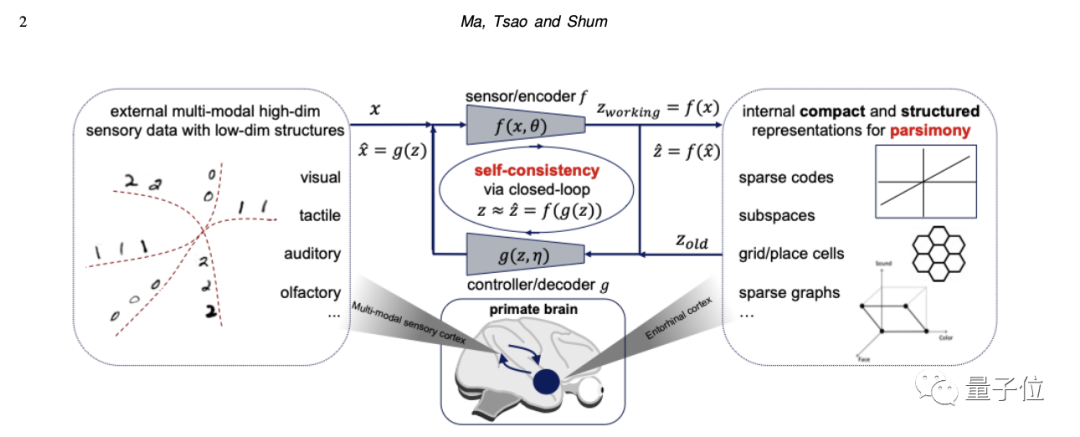

To this end, the paper puts forward two basic principles, simplicity (parsimony) and self-consistency, which respectively answer two basic questions about learning.

1. What to learn: What is the objective of learning from data, and how can it be measured?

2. How to learn: How can we achieve such an objective through efficient and effective computation?

They argue that these two principles govern the function and design of any intelligent system, and that both can be restated in a measurable and computable way.

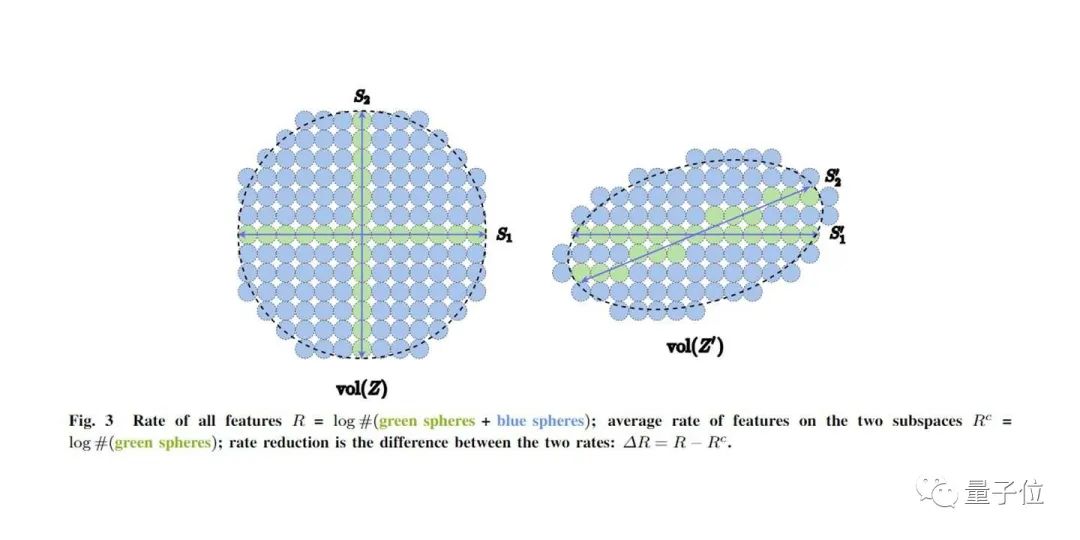

Take simplicity as an example. The foundation of intelligence is the low-dimensional structure in the environment, which makes prediction and generalization possible; this is the principle of simplicity. How should it be measured? The paper proposes a geometric formulation for measuring simplicity.
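The article does not spell the formula out. As a rough illustration only, the sketch below assumes the measure is the coding rate reduction from Ma Yi's earlier work on maximal coding rate reduction; that link is an assumption, not something this article states. The function names `coding_rate` and `rate_reduction`, the distortion parameter `eps`, and the toy data are all hypothetical choices made for the example.

```python
import numpy as np

def coding_rate(Z, eps=0.5):
    """Approximate coding rate of features Z (shape d x n, one column per sample):
    R(Z) = 1/2 * logdet(I + d / (n * eps^2) * Z Z^T)."""
    d, n = Z.shape
    gram = np.eye(d) + (d / (n * eps ** 2)) * (Z @ Z.T)
    _, logdet = np.linalg.slogdet(gram)
    return 0.5 * logdet

def rate_reduction(Z, labels, eps=0.5):
    """Rate of the whole set minus the size-weighted rates of each class."""
    n = Z.shape[1]
    whole = coding_rate(Z, eps)
    parts = sum(
        (np.sum(labels == c) / n) * coding_rate(Z[:, labels == c], eps)
        for c in np.unique(labels)
    )
    return whole - parts

# Toy data (assumed): two classes of 50 samples each in a 4-dimensional feature space.
rng = np.random.default_rng(0)
labels = np.repeat([0, 1], 50)
class_a = np.zeros((4, 50))
class_a[:2] = rng.standard_normal((2, 50))   # class 0 lives in dims 0-1
class_b_sep = np.zeros((4, 50))
class_b_sep[2:] = rng.standard_normal((2, 50))   # class 1 in dims 2-3 (separate subspace)
class_b_same = np.zeros((4, 50))
class_b_same[:2] = rng.standard_normal((2, 50))  # class 1 in dims 0-1 (same subspace)

z_sep = np.hstack([class_a, class_b_sep])
z_same = np.hstack([class_a, class_b_same])
print("separate subspaces:", rate_reduction(z_sep, labels))
print("shared subspace:   ", rate_reduction(z_same, labels))
```

Under these assumptions, features whose classes occupy separate low-dimensional subspaces score a higher rate reduction than features whose classes overlap in one subspace, which is the geometric intuition behind measuring simplicity.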

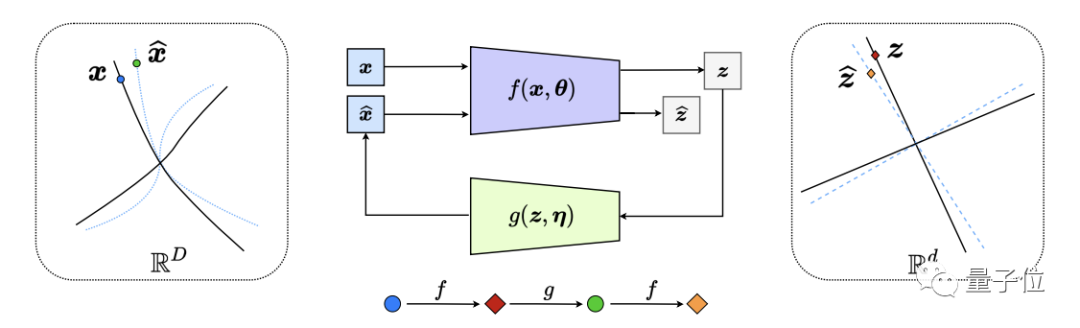

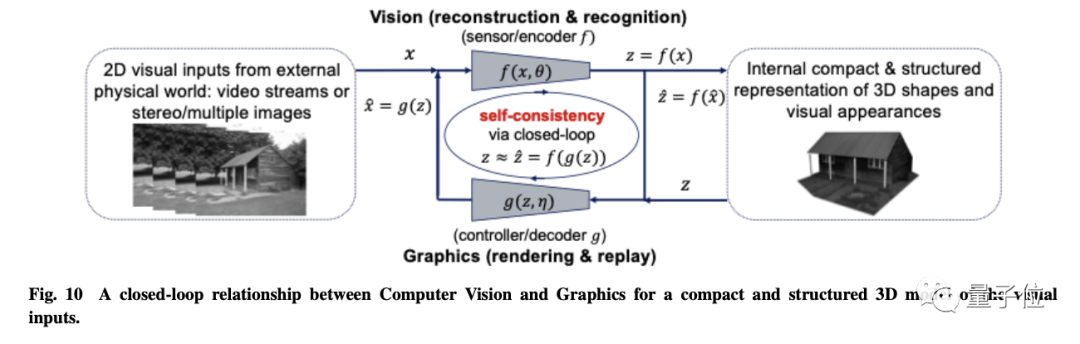

From these two principles, the paper derives a universal architecture for perception and intelligence: a closed-loop transcription between a compressor and a generator.

This implies that the interaction between the two should be a pursuit-evasion game, in which they play opposite roles with respect to a combined objective function, rather than acting as an autoencoder.

This is one of the main advantages of the framework: learning through self-supervision and self-critique is the most natural and effective approach.
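To make the closed loop concrete, here is a minimal sketch of the quantity such a game could be played over. It assumes that the mismatch between the features Z = f(X) and the re-encoded features f(g(f(X))) is measured by a rate-reduction style distance, as in the authors' related closed-loop transcription work; the article itself does not give this formula. The linear encoder, both decoders, and the names `rate_distance` and `closed_loop_gap` are hypothetical.

```python
import numpy as np

def coding_rate(Z, eps=0.5):
    """R(Z) = 1/2 * logdet(I + d / (n * eps^2) * Z Z^T) for Z of shape d x n."""
    d, n = Z.shape
    gram = np.eye(d) + (d / (n * eps ** 2)) * (Z @ Z.T)
    _, logdet = np.linalg.slogdet(gram)
    return 0.5 * logdet

def rate_distance(Z1, Z2, eps=0.5):
    """Rate of the union minus the average rate of the two equally sized sets."""
    union = np.hstack([Z1, Z2])
    return coding_rate(union, eps) - 0.5 * (coding_rate(Z1, eps) + coding_rate(Z2, eps))

def closed_loop_gap(X, encode, decode, eps=0.5):
    """Compare features before and after one trip around the loop x -> z -> x_hat -> z_hat.
    The comparison happens in feature space, not in data space."""
    Z = encode(X)
    Z_hat = encode(decode(Z))
    return rate_distance(Z, Z_hat, eps)

# Toy setup (assumed): a linear encoder W and two candidate linear decoders.
rng = np.random.default_rng(0)
X = rng.standard_normal((5, 100))   # 100 samples in 5 dimensions
W = rng.standard_normal((2, 5))     # encoder: 5-d data -> 2-d features
W_pinv = np.linalg.pinv(W)          # decoder that the encoder maps back exactly
drop = np.diag([1.0, 0.0])          # decoder that discards the second feature

def encode(x):
    return W @ x

gap_good = closed_loop_gap(X, encode, lambda z: W_pinv @ z)
gap_bad = closed_loop_gap(X, encode, lambda z: W_pinv @ (drop @ z))
print("faithful decoder gap:", gap_good)
print("lossy decoder gap:   ", gap_bad)
```

In a game of this kind, the generator would try to shrink the gap while the compressor tries to expose it. The sketch only evaluates the gap for fixed maps and does not implement the training loop.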

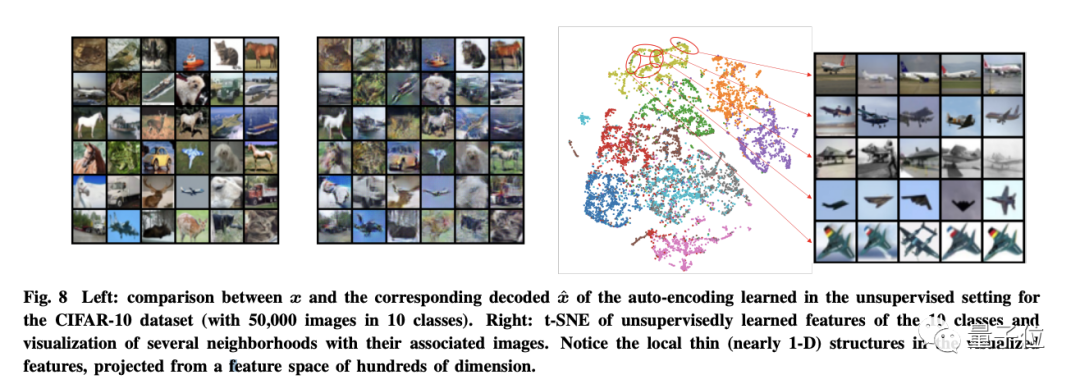

Fundamentally, the framework can be extended to a fully unsupervised setting: one only needs to treat each new sample and its augmentations as a new class.

With this combination of self-supervision and self-critique, such a closed-loop transcription becomes easy to learn.

It is worth mentioning that the structure of the features learned this way resembles that of the category-selective areas observed in the primate brain.

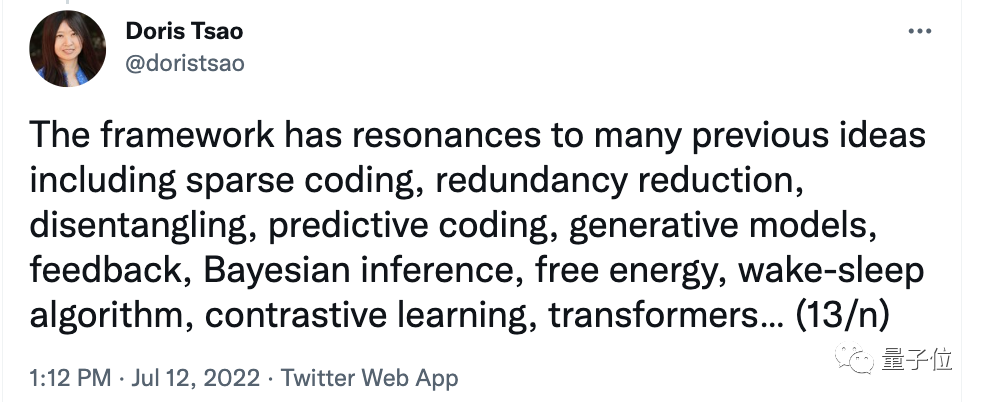

One of the authors, Cao Ying, said that the framework resembles many earlier ideas, including predictive coding, contrastive learning, generative models, Transformer...

In addition, they point to some new directions, such as a closed-loop relationship between CV and graphics.

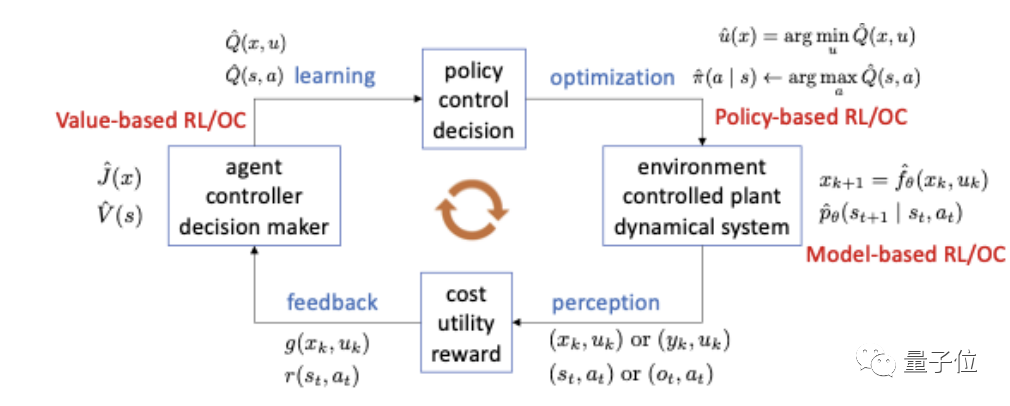

Finally, putting it all together: for an autonomous intelligent agent, the best strategy for learning a task is to integrate perception (feedback), learning, optimization, and action into a closed loop.

This offers a unified explanation of the evolution of modern deep networks and of many artificial intelligence practices.

Although the whole article uses the modeling of visual data as its example, the researchers believe these two principles will unify the understanding of a broad range of autonomous intelligent systems and provide a framework for understanding the brain.

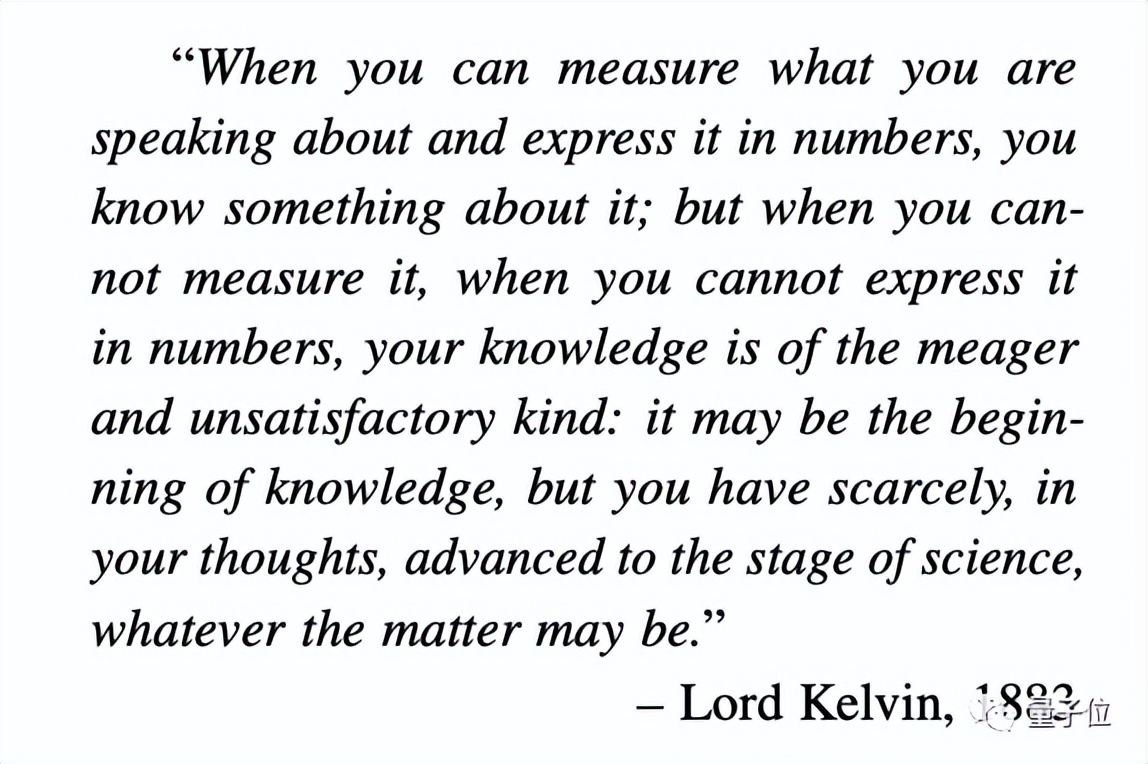

In the end, they close with a quote from Lord Kelvin, the thermodynamics pioneer.

Roughly, it says that only when you can measure what you are talking about and express it in numbers do you really know something about it.

One more thing

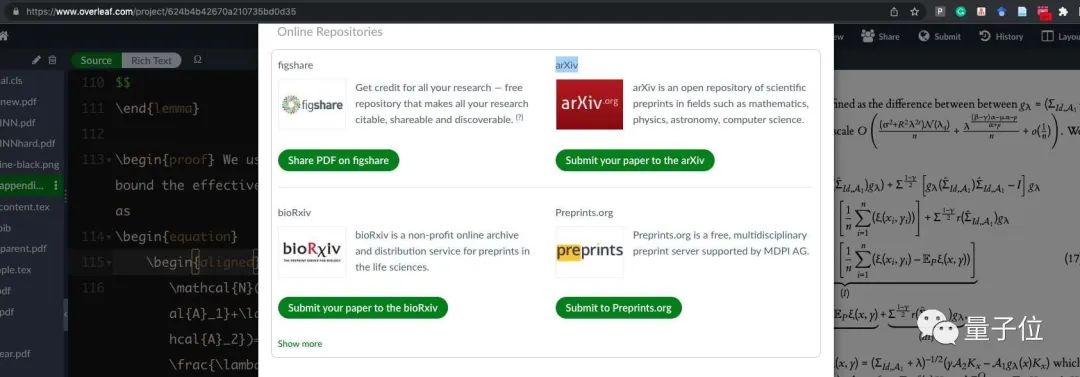

Interestingly, while submitting to arXiv, Professor Ma Yi even asked for help online:

Can't someone write an interface for one-click paper submission, so we don't have to spend so much time modifying the paper?

Netizens chimed in with advice: use the LaTeX compiler instead of pdfLaTeX.

Others responded even more directly.

Readers who are interested can follow the links below to learn more~

Paper link: https://arxiv.org/abs/2207.04630

Reference links:

[1] https://weibo.com/u/3235040884?profile_ftype=1&IS_ALL=1#1657668935671

[2] https://twitter.com/Yimatweets/status/1546703643692384256

[3] https://twitter.com/dorists/status/15467225091534851

- END -
