AI is crazy! 81% accuracy on advanced math exams, and a competition-math score higher than a computer science PhD's

Author: QbitAI  Date: 2022.07.01

Mengchen, Fengjue, reporting from Aofei Temple | QbitAI official account

Failing advanced math exams has been a nightmare for countless people.

If you were told you can't even beat an AI at it, would that be even harder to accept?

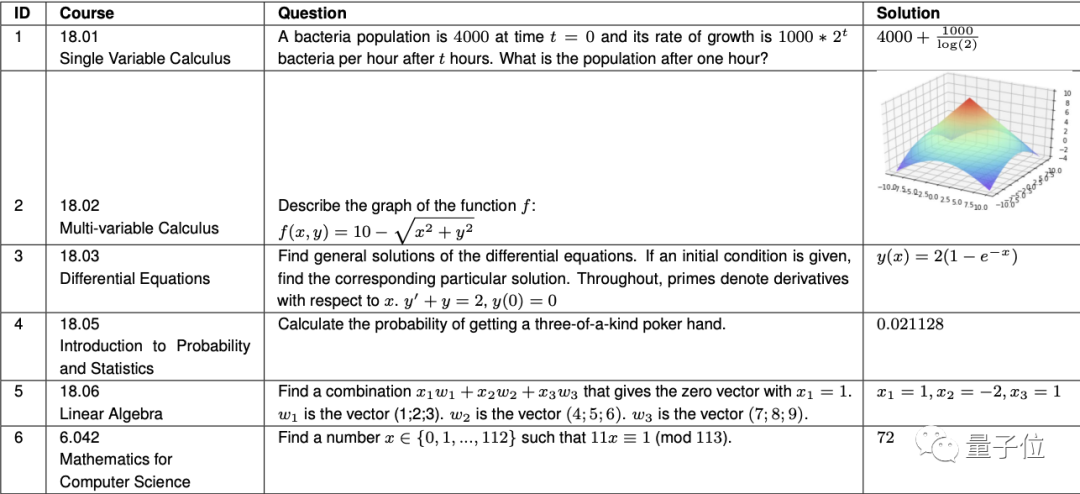

That's right: OpenAI's Codex scored 81.1% on seven advanced math courses from MIT, on par with MIT undergraduates.

The courses range from introductory calculus to differential equations, probability theory, and linear algebra. Beyond computing answers, it can even draw plots.

The story recently made Weibo's trending list.

△ "Only" 81 points? Aren't our expectations for AI a bit too high?

Now the latest big news comes from Google:

Not just mathematics: our AI has even achieved the top scores across science and engineering!

It seems that in the race to train AI into a "problem-solving grinder", the tech giants have pushed the competition to a new height.

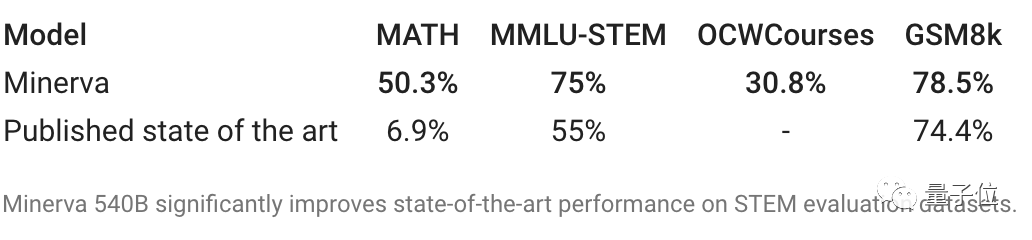

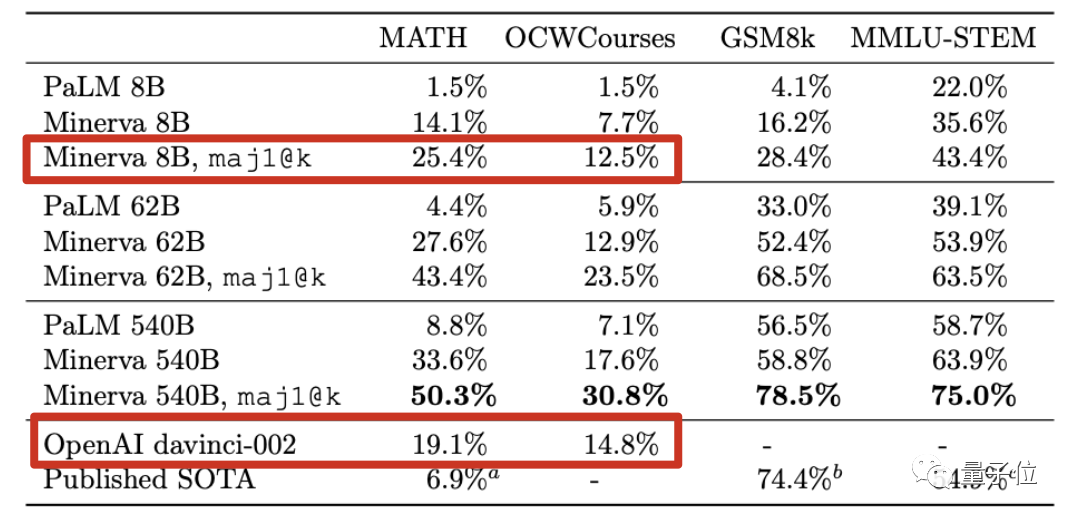

Google's latest AI took four exams.

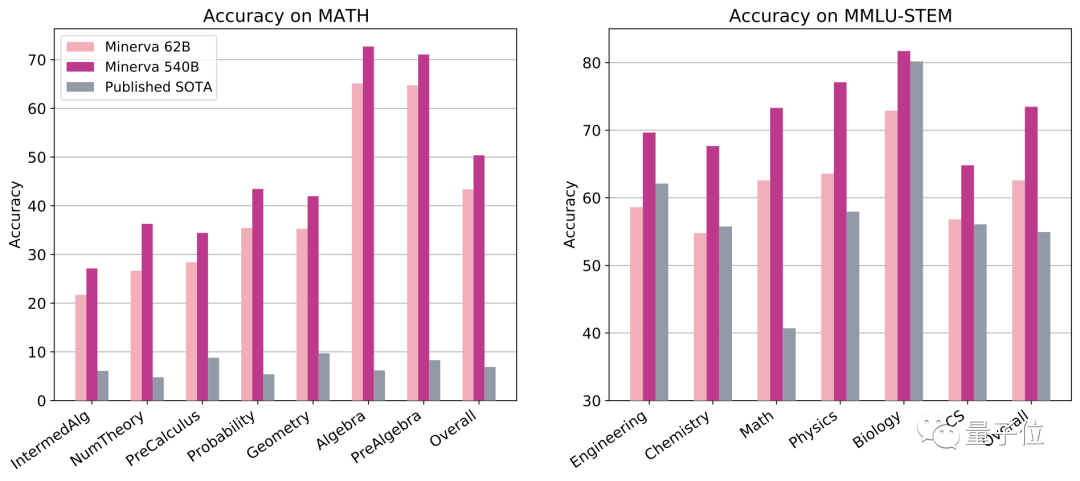

MATH, a competition-math exam: in the past, only a three-time IMO gold medalist scored 90, while an ordinary computer science PhD could only score about 40.

As for other AIs taking it, the previous best score was only 6.9...

This time, however, Google's new AI scored 50, higher than the computer science PhD.

MMLU-STEM, a comprehensive exam, spans math, physics, chemistry, biology, electrical engineering, and computer science, with questions at high school or even university level.

On this exam, the "full-power" version of Google's AI also set the highest score, raising the previous best by roughly 20 points.

On GSM8K, a grade-school math exam, it pushed the score straight to 78. By comparison, GPT-3 failed to pass (only 55).

Even on MIT undergraduate and graduate courses such as solid-state chemistry, astronomy, differential equations, and special relativity, Google's new AI answered nearly a third of the more than 200 questions.

Most importantly, OpenAI earned its high math score through "programming skills", but Google's AI takes a different route this time: it "thinks like a person".

Like a humanities student, it only memorizes the textbooks and does no practice problems, yet it has stronger science and engineering problem-solving skills.

It is worth mentioning that Lewkowycz, one of the paper's authors, also shared a highlight that is not in the paper:

Our model took this year's Polish national math matriculation exam and scored above the national average.

Seeing this, some parents got restless.

If I tell my daughter about this, I'm afraid she'll use AI to do her homework. But if I don't, she won't be prepared for the future!

From the industry's perspective, the most amazing thing about this study is that it reaches this level with a language model alone, without any hard-coding.

So how did it do it?

AI binge-reads 2 million arXiv papers

The new model, Minerva, is built on PaLM, the general-purpose language model based on the Pathways architecture.

It was obtained by further training PaLM models with 8 billion, 62 billion, and 540 billion parameters.

Minerva works completely differently from Codex.

Codex's approach is to rewrite each math problem as a programming task and then solve it by writing code.
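Here is a minimal sketch of what "solving by writing code" means. It uses a toy problem made up for illustration. The problem is not from the MIT test, and the code is not actual Codex output. The question "What is the sum of all positive divisors of 36?" becomes a short program whose output is the answer.

```python
# Toy problem (hypothetical, not from the MIT benchmark):
# "What is the sum of all positive divisors of 36?"
# A Codex-style solver turns the question into a program and runs it.

def sum_of_divisors(n: int) -> int:
    """Return the sum of all positive divisors of n using trial division."""
    return sum(d for d in range(1, n + 1) if n % d == 0)

answer = sum_of_divisors(36)
print(answer)  # 1+2+3+4+6+9+12+18+36
```

The answer comes from executing the code rather than from reasoning written in prose. That is the key contrast with Minerva.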

Minerva, by contrast, reads papers and learns mathematical symbols the same way it learns natural language.

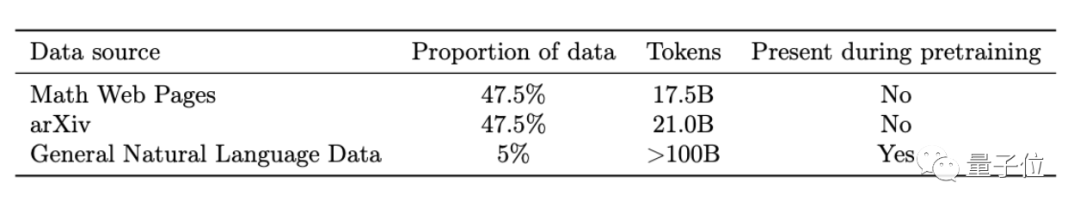

Minerva continues training from PaLM on a new dataset made up of three parts:

mainly 2 million academic papers collected from arXiv, 60GB of web pages containing LaTeX formulas, and a small portion of text already used in PaLM's training stage.

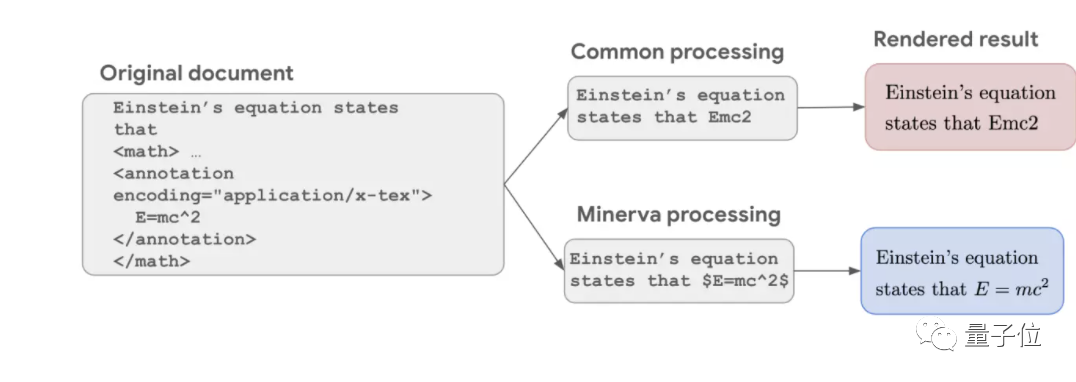

Typical NLP data-cleaning pipelines delete symbols and keep only plain text, which leaves formulas incomplete. For example, Einstein's famous mass-energy equation E=mc^2 is reduced to just "Emc2".

This time, however, Google kept all the formulas and ran the same Transformer training procedure used for plain text, so the AI learns symbols just as it learns language.
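The following toy comparison illustrates the difference. It assumes a simplistic cleaner that keeps only letters, digits, and whitespace. Both functions are hypothetical and are not Google's actual data pipeline.

```python
import re

def naive_clean(text: str) -> str:
    """Aggressive cleaning (assumed for illustration): drop every character
    that is not a letter, digit, or whitespace. This destroys LaTeX."""
    return re.sub(r"[^A-Za-z0-9\s]", "", text)

def math_aware_clean(text: str) -> str:
    """Formula-preserving cleaning (assumed for illustration): only collapse
    runs of whitespace, leaving symbols such as $, =, and ^ untouched."""
    return re.sub(r"\s+", " ", text).strip()

sample = "Einstein's famous equation: $E=mc^2$"
print(naive_clean(sample))       # the formula collapses to "Emc2"
print(math_aware_clean(sample))  # the LaTeX survives intact
```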

This is one reason Minerva does better at math than previous language models.

However, unlike AIs built specifically for math problems, Minerva is trained without any explicit underlying mathematical structure. This brings one disadvantage and one advantage.

The disadvantage is that it may reach the correct answer through incorrect steps.

The advantage is that it adapts to different disciplines. Even problems that cannot be expressed in formal mathematical language can be solved by drawing on natural-language understanding.

At inference time, Minerva also combines several new techniques Google has developed recently.

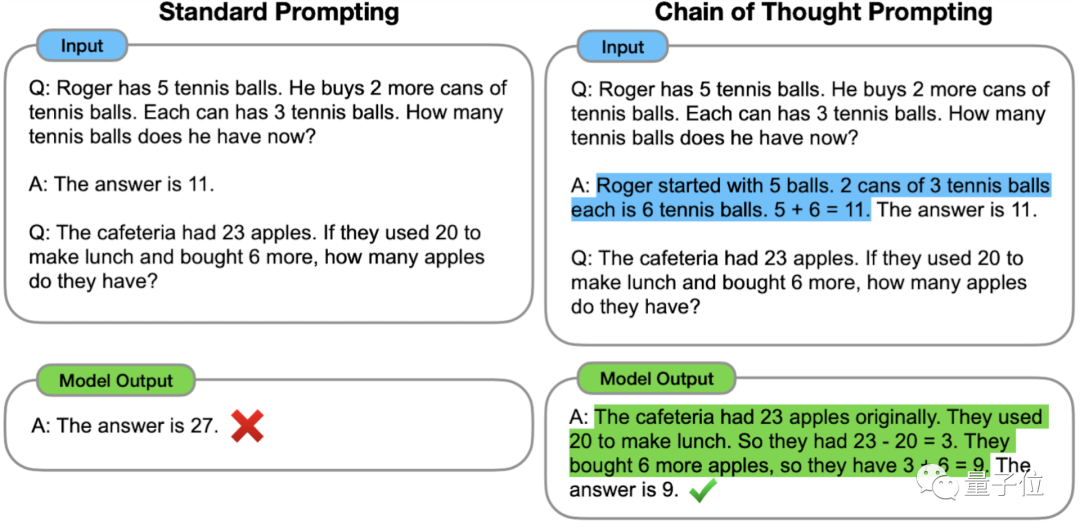

The first is chain-of-thought prompting, proposed by the Google Brain team in January this year.

Concretely, the question is preceded by an example question whose answer is worked out step by step. The AI then follows a similar reasoning process when solving the new problem, and it can correctly answer questions it would otherwise get wrong.
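The sketch below shows roughly how a few-shot chain-of-thought prompt is assembled. The worked example, the "Question:/Answer:" layout, and the function name `build_cot_prompt` are all assumptions for illustration. Minerva's real prompts are not reproduced here.

```python
# Hypothetical worked example: a question, its step-by-step reasoning, and the answer.
COT_EXAMPLES = [
    {
        "question": "A shop sells pens at 3 dollars each. How much do 4 pens cost?",
        "reasoning": "Each pen costs 3 dollars, so 4 pens cost 4 * 3 = 12 dollars.",
        "answer": "12",
    },
]

def build_cot_prompt(examples, new_question):
    """Prepend worked examples (with their reasoning) to a new question,
    leaving the final 'Answer:' open for the model to continue."""
    parts = []
    for ex in examples:
        parts.append(
            f"Question: {ex['question']}\n"
            f"Answer: {ex['reasoning']} The final answer is {ex['answer']}."
        )
    parts.append(f"Question: {new_question}\nAnswer:")
    return "\n\n".join(parts)

prompt = build_cot_prompt(COT_EXAMPLES, "A box holds 6 eggs. How many eggs are in 5 boxes?")
print(prompt)
```

Because the example shows its intermediate step ("4 * 3 = 12"), a model continuing this text is nudged to write out its own steps before giving a final answer.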

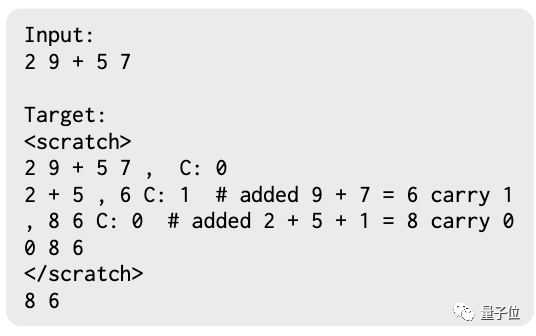

Next is the scratchpad method developed by Google and MIT. It lets the AI temporarily store the intermediate results of step-by-step computation.
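To picture what a "scratchpad" contains, the sketch below uses ordinary code to write out the intermediate results of multi-digit addition line by line. It is only an illustration of the trace format, under our own assumptions. In the scratchpad method, the model itself is trained or prompted to emit such intermediate lines before its final answer. The function name and line format are hypothetical.

```python
from typing import List, Tuple

def add_with_scratchpad(a: int, b: int) -> Tuple[int, List[str]]:
    """Add two non-negative integers digit by digit (least significant first),
    recording each intermediate step the way a scratchpad would."""
    steps: List[str] = []
    da, db = str(a)[::-1], str(b)[::-1]  # reversed digit strings
    carry = 0
    digits: List[int] = []
    for i in range(max(len(da), len(db))):
        x = int(da[i]) if i < len(da) else 0
        y = int(db[i]) if i < len(db) else 0
        total = x + y + carry
        digits.append(total % 10)
        steps.append(
            f"digit {i}: {x} + {y} + carry {carry} = {total}, "
            f"write {total % 10}, carry {total // 10}"
        )
        carry = total // 10
    if carry:
        digits.append(carry)
        steps.append(f"final carry: write {carry}")
    result = int("".join(str(d) for d in reversed(digits)))
    return result, steps

result, steps = add_with_scratchpad(57, 68)
for line in steps:
    print(line)
print("answer:", result)
```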

Finally, there is majority voting, published only in March this year.

The AI answers the same question multiple times, and the most frequent answer is selected.
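Here is a minimal sketch of the voting step, assuming the final answers have already been extracted from several sampled solutions as strings. The sample answers below are made up, and the function name `majority_vote` is hypothetical.

```python
from collections import Counter

def majority_vote(answers):
    """Return the most frequent final answer among sampled solutions.
    Counter.most_common orders equal counts by first occurrence,
    so ties go to the answer that appeared earliest."""
    counts = Counter(answers)
    return counts.most_common(1)[0][0]

# Final answers extracted from five hypothetical samples for one question.
samples = ["12", "12", "15", "12", "9"]
print(majority_vote(samples))
```

Only the final answers are compared, so different reasoning paths that reach the same result all count toward the same vote.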

With all these techniques combined, the 540-billion-parameter Minerva achieved SOTA on a range of test sets. Even the 8-billion-parameter Minerva matches the latest davinci-002 version of GPT-3 on competition math problems and MIT OpenCourseWare questions.

So what kinds of problems can Minerva actually solve?

Google has also released a set of samples, so let's take a look.

Masters math and chemistry, and even machine learning

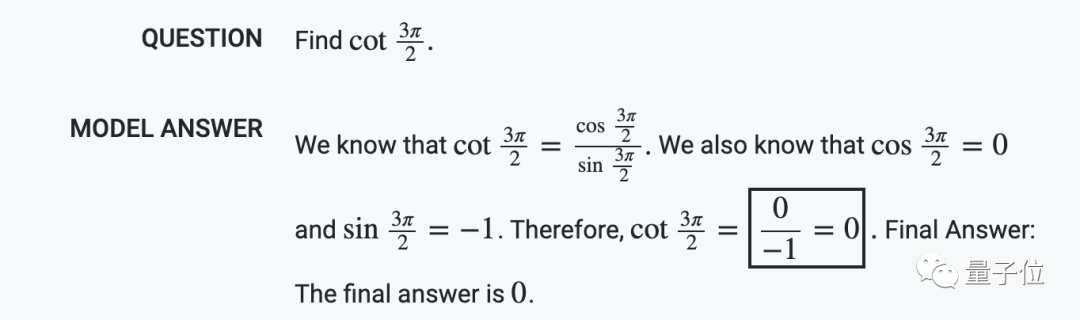

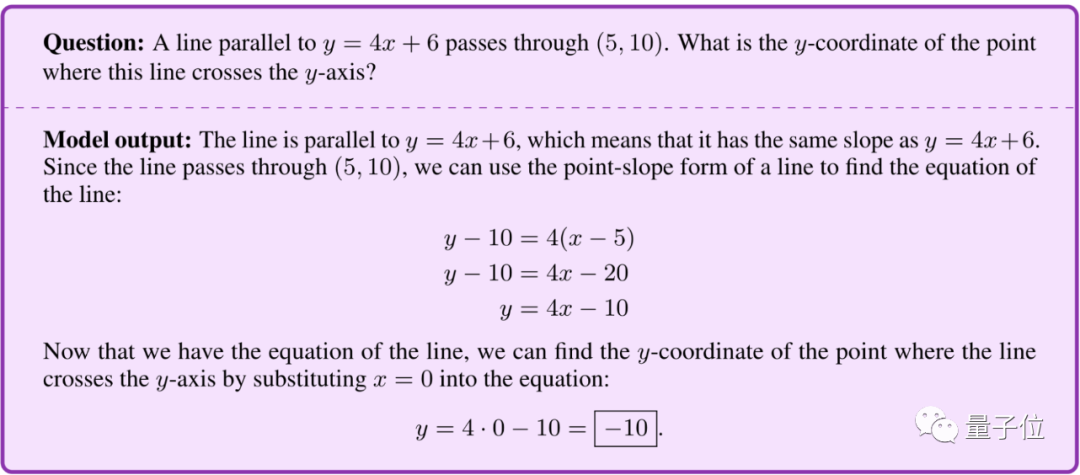

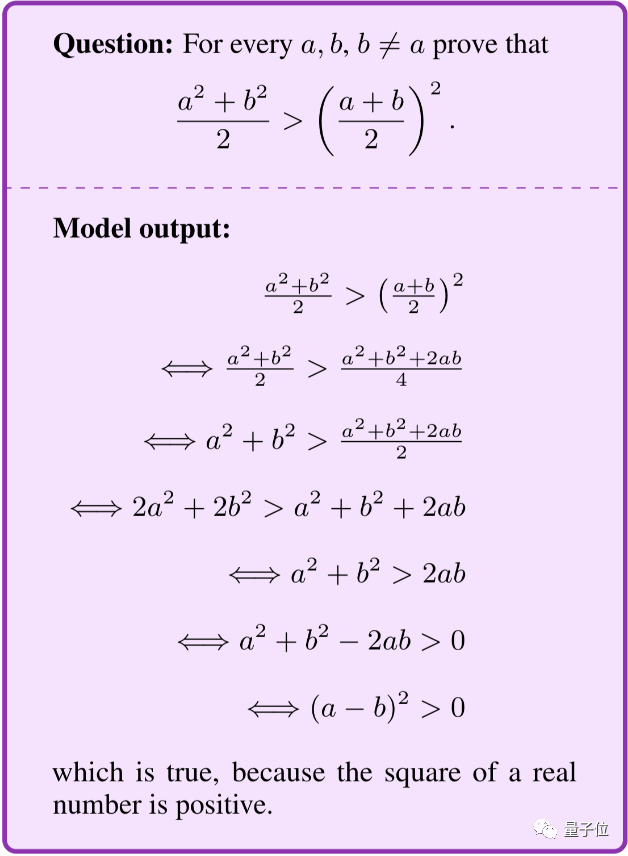

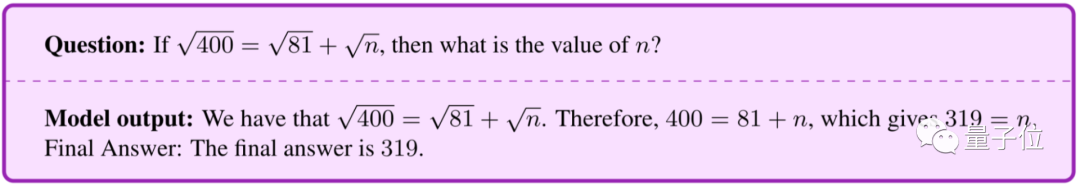

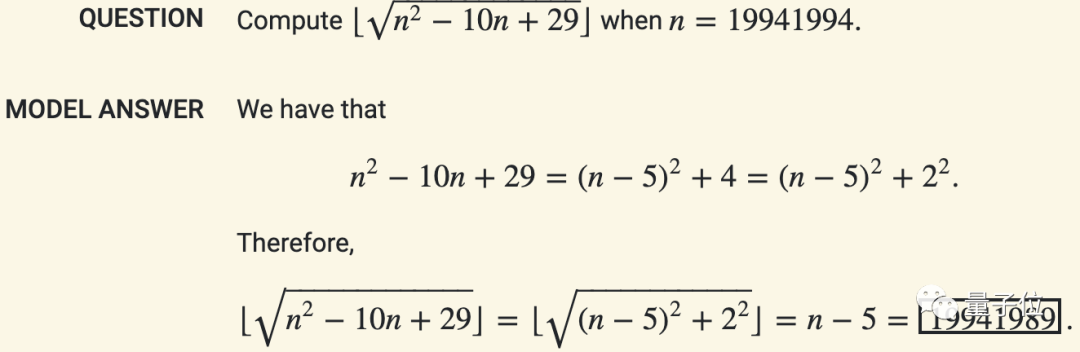

In mathematics, Minerva computes values step by step like a human, instead of using brute force.

For word problems, it can set up the equations itself and simplify them.

It can even derive proofs.

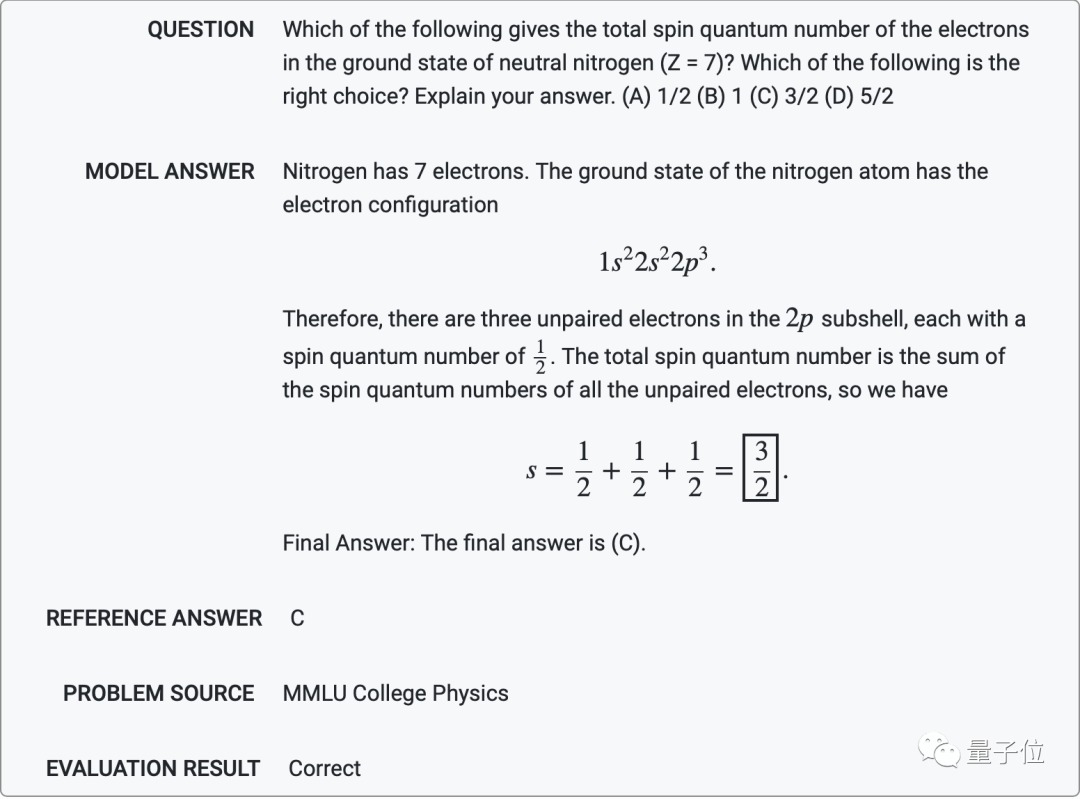

In physics, Minerva can solve university-level problems, such as ones about the electrons of a neutral nitrogen atom (Z = 7).

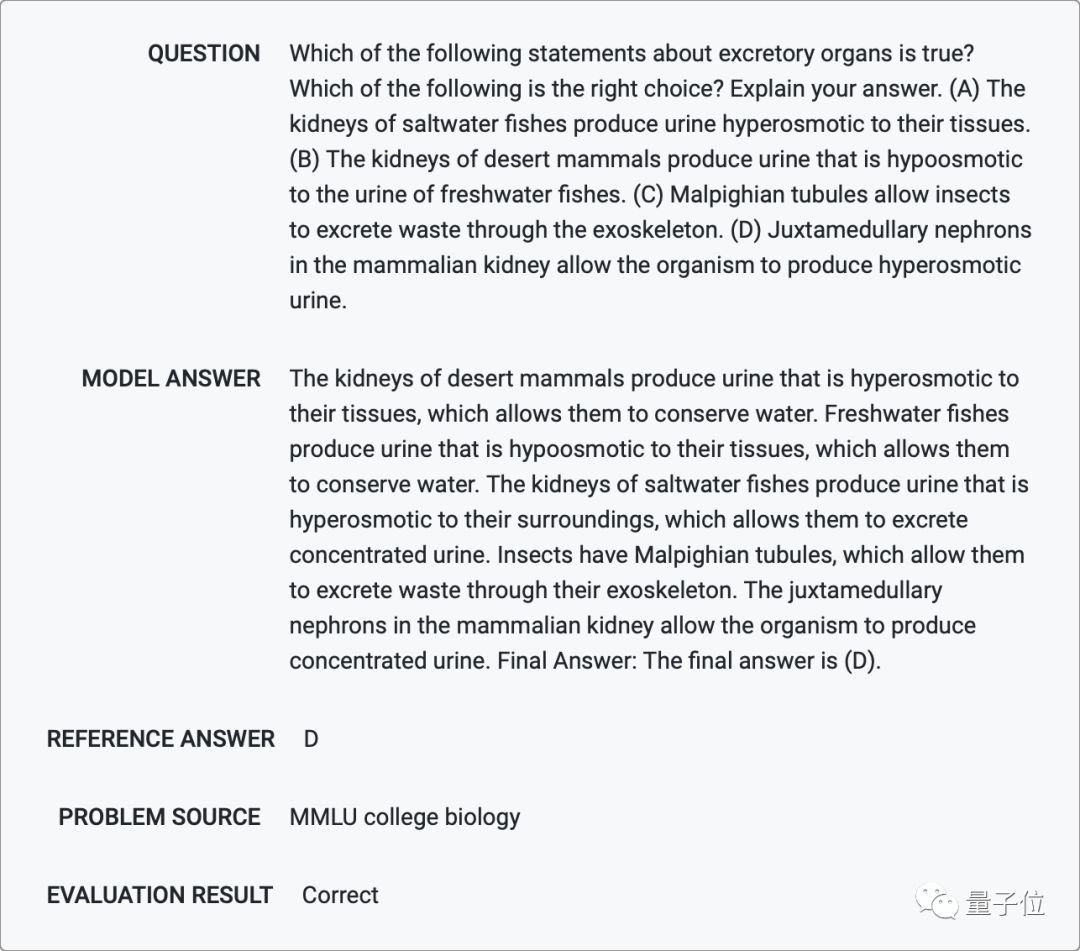

In biology and chemistry, Minerva can also answer various multiple-choice questions using its language understanding.

Which of the following forms of mutation has no negative effect on the protein encoded by the DNA sequence?

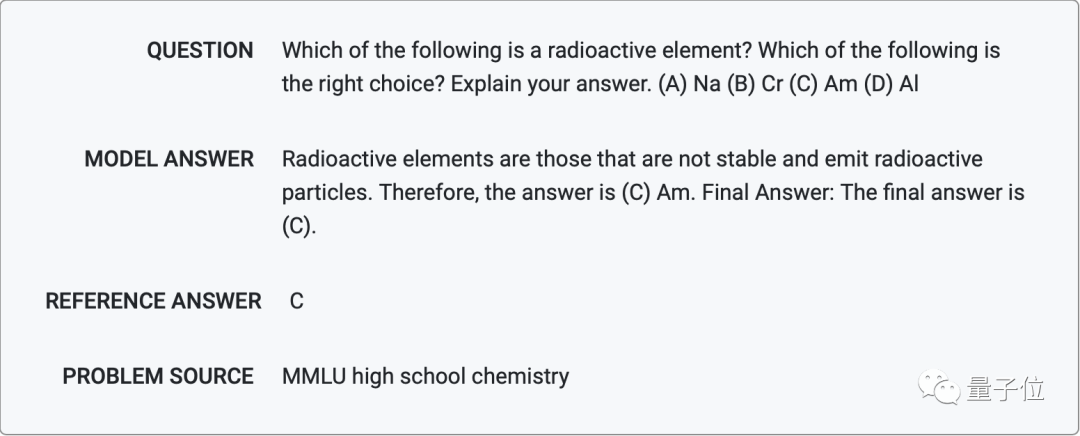

Which of the following is a radioactive element?

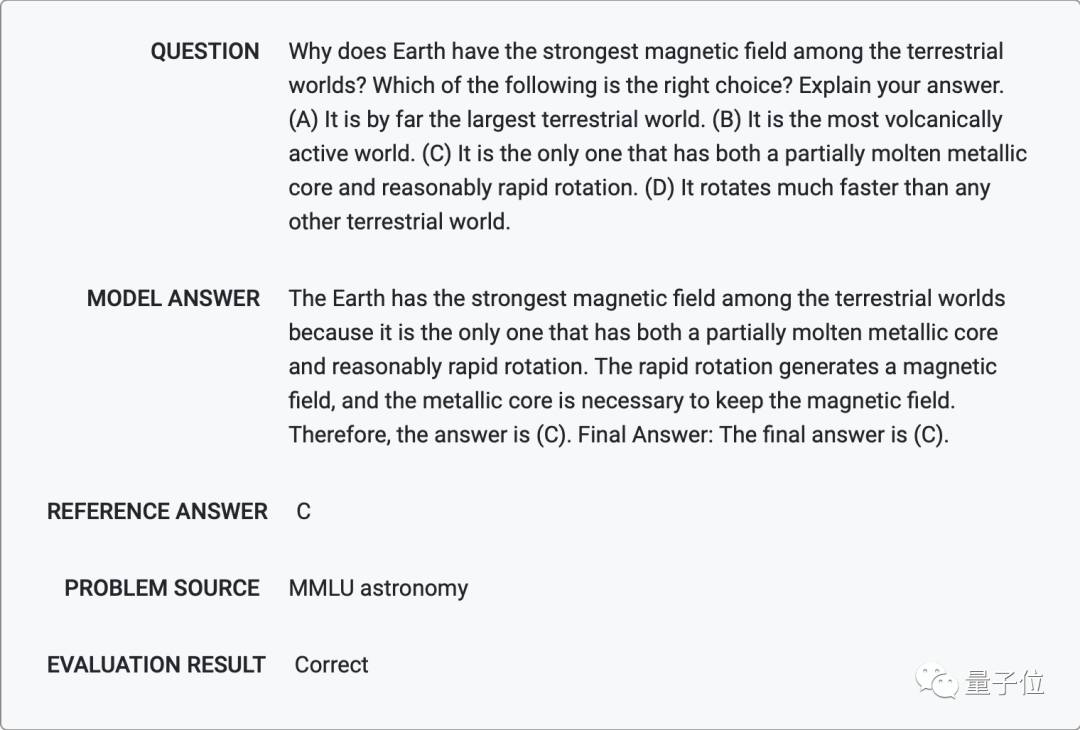

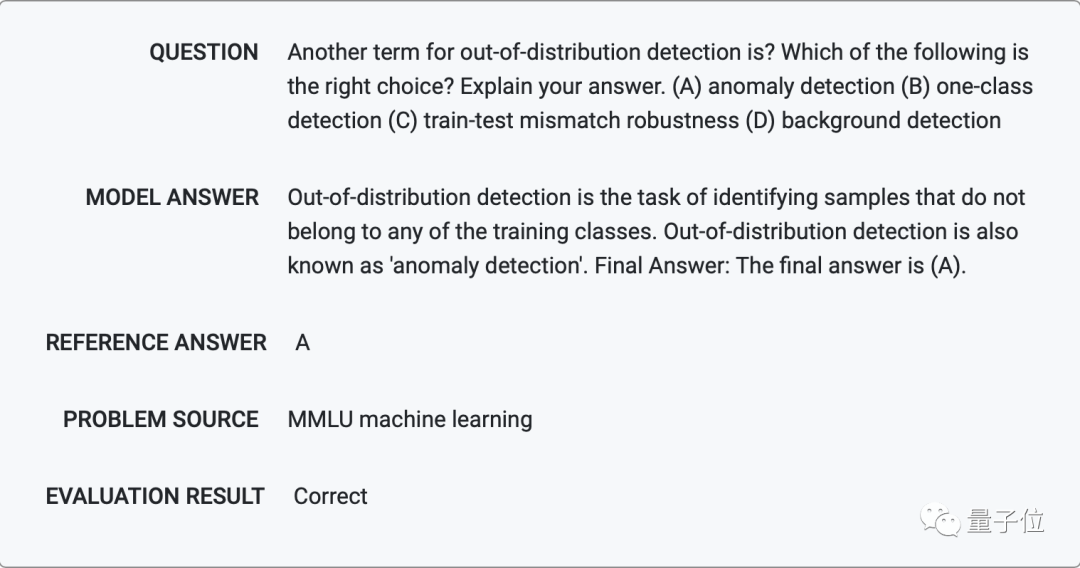

And astronomy: why does Earth have a strong magnetic field?

In machine learning, it explains what "out-of-distribution sample detection" means and correctly gives another name for the term.


However, Minerva sometimes makes elementary mistakes, such as canceling the √ on both sides of an equation.

In about 8% of cases, there is also a "false positive": Minerva's reasoning is wrong but its final answer is right, as in the example below.

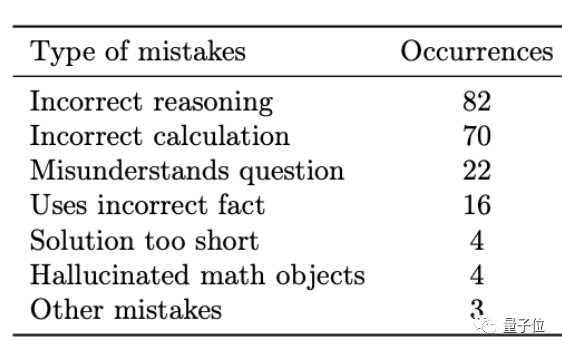

Their analysis showed that most errors are calculation errors and reasoning errors. Only a small fraction come from other causes, such as misunderstanding the question or using incorrect facts in a step.

Calculation errors could easily be fixed by connecting the model to an external calculator or a Python interpreter. The other types of errors are hard to correct, however, because the neural network is so large.
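The following sketch shows one way an external calculator could catch calculation errors: scan a generated solution for simple "a op b = c" steps and recompute each one exactly. This is a hypothetical post-hoc checker written for illustration. Minerva does not do this, and the step format and function name are assumptions.

```python
import operator
import re
from fractions import Fraction

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}
# Matches steps like "4 * 3 = 12" (integers or decimals, optional minus sign).
STEP = re.compile(
    r"(-?\d+(?:\.\d+)?)\s*([+\-*/])\s*(-?\d+(?:\.\d+)?)\s*=\s*(-?\d+(?:\.\d+)?)"
)

def check_arithmetic(text):
    """Return (step, is_correct) for every 'a op b = c' found in text,
    recomputing with exact Fraction arithmetic to avoid float rounding."""
    results = []
    for a, op, b, c in STEP.findall(text):
        step = f"{a} {op} {b} = {c}"
        if op == "/" and Fraction(b) == 0:
            results.append((step, False))  # division by zero is never correct
            continue
        expected = OPS[op](Fraction(a), Fraction(b))
        results.append((step, expected == Fraction(c)))
    return results

solution = "Each pen costs 3 dollars, so 4 * 3 = 12. With a fee of 5 dollars, 12 + 5 = 18."
for step, ok in check_arithmetic(solution):
    print(step, "OK" if ok else "WRONG")
```

This catches only slips in explicit arithmetic. As the paragraph above notes, reasoning errors cannot be caught by recomputation.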

Overall, Minerva's performance surprised many people, who asked for an API in the comments (unfortunately, Google has no plans to release one yet).

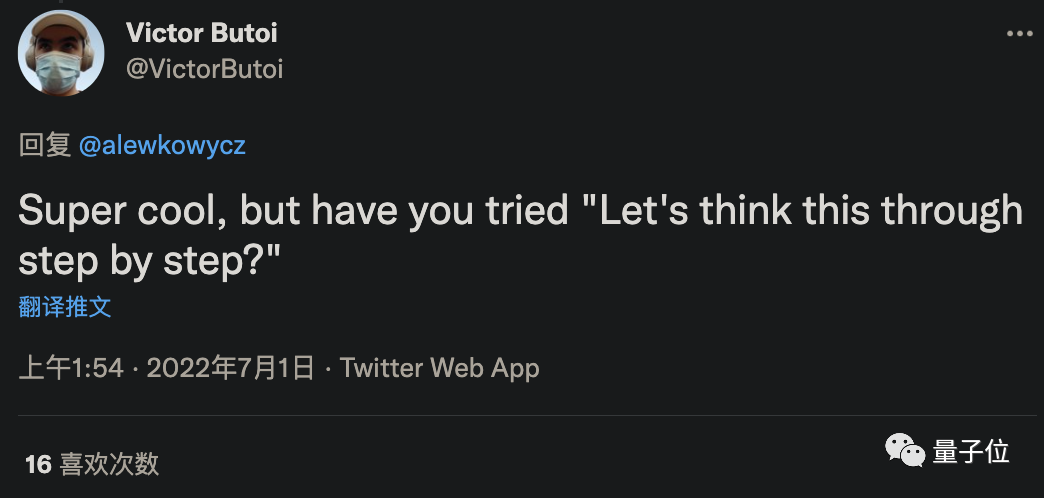

Some netizens wondered whether the "coaxing" trick that let GPT-3 solve 61% of problems a few days ago could raise Minerva's accuracy further.

The author replied, however, that coaxing is zero-shot learning. No matter how strong it is, it probably can't match few-shot learning with 4 examples.

Other netizens asked: since it can solve problems, can it work in reverse and set them?

In fact, MIT has already been using OpenAI's model to write exam questions for college students.

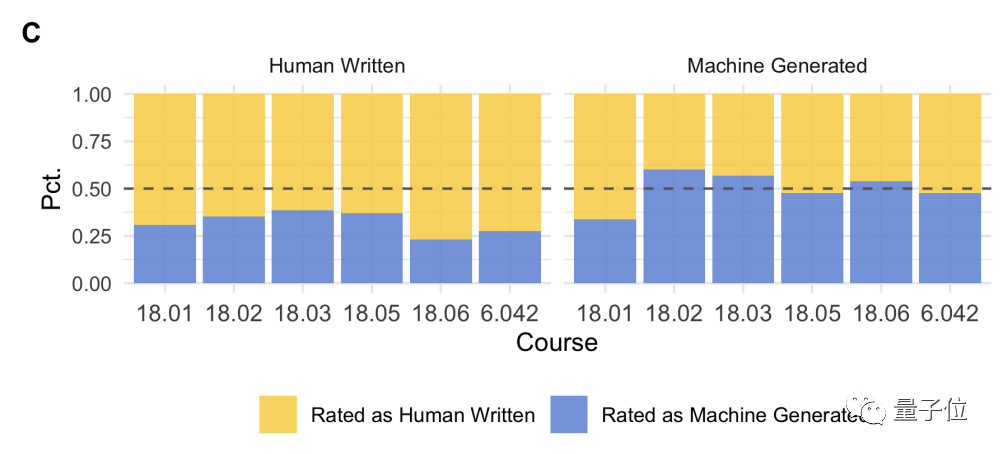

They mixed human-written questions with AI-generated ones and had students fill out a questionnaire. The students found it hard to tell which questions came from AI.

In short, AI researchers are busy reading this paper.

Students are looking forward to the day they can use AI to do their homework.

And teachers are looking forward to the day AI can write their exam papers.

Paper: https://storage.googleapis.com/minerva-paper/minerva_paper.pdf

Demo: https://minerva-demo.github.io/

Related papers:
Chain of Thought: https://arxiv.org/abs/2201.11903
Scratchpads: https://arxiv.org/abs/2112.00114
Majority voting: https://arxiv.org/abs/2203.11171

Reference links:
https://ai.googleblog.com/2022/06/minerva-solving-quantitative-reasoning.html
https://twitter.com/bneyshabur/status/1542563148334596098
https://twitter.com/alewkowycz/status/1542559176483823622

- END -
