Xidian AI Students Achieve Strong Results in CVPR 2022 Challenges

Author: Xi'an University of Electronic Science and Technology (Xidian University)   Date: 2022.06.23

Recently, the 2022 IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2022), a top conference in computer vision, officially opened, and the awards of its many challenges have been announced one after another. The School of Artificial Intelligence of Xi'an University of Electronic Science and Technology achieved strong results. Under the guidance of Academician Jiao Licheng, Professor Liu Fang, Professor Wang Shuang, Professor Qu Yan, Dr. Liu Xu, Dr. Quan Dou and the team's doctoral students Yang Yuting, Wang Yuhan, Huang Zhongjian, Zhao Jiaxuan, Liu Yang, Song Xue, Geng Xueli, Bao Yueyue, Ma Yanbiao, You Chao, Zhang Zixiao, Ma Mengru and others, the school's teams won 2 first places, 2 second places and 2 third places across four CVPR 2022 challenges. In addition, 14 participating teams placed in the top five or top ten of their challenge leaderboards. All award-winning teams were invited by the challenge organizers to present or exhibit their winning methods at the corresponding workshops. This work was supported by the National Natural Science Foundation of China, the Ministry of Education Innovative Research Team program, the national Discipline Innovation and Talent Introduction Base program, the national Double First-Class discipline construction project, and the CAAI-Huawei MindSpore Academic Award Fund, among others.

The team of 2020 master's students Lu Xiaoqiang, Cao Guojin and others won first place in the CVPR 2022 WoodScape Fisheye Object Detection Challenge for Autonomous Driving, and the team of 2021 graduate students Li Chenghui, Li Chao and Tan Xiao took third place in the same challenge.

Lu Xiaoqiang, Cao Guojin

Li Chenghui, Li Chao, Tan Xiao

WoodScape is a multi-task, multi-camera fisheye image dataset for autonomous driving. This challenge aims to advance 2D object detection on fisheye images. The target categories are objects common on the road: vehicles, pedestrians, bicycles, traffic lights and traffic signs. Compared with conventional images, fisheye images suffer from severe nonlinear distortion and large variation in object scale.

To address this, the champion team built on the original YOLOv4 framework: it introduced multi-head self-attention to design MHSA-Darknet as the backbone, which extracts more contextual information and more discriminative features, and used BiFPN for cross-scale feature fusion. Compared with the original YOLOv4, the resulting detector is more accurate and more robust, and it effectively mitigates problems such as large differences in object size, nonlinear distortion and interference from complex backgrounds. The team also used a model ensembling strategy to further improve final detection accuracy.
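BiFPN, as proposed in EfficientDet, fuses feature maps from different scales with learnable non-negative weights ("fast normalized fusion"). The article does not describe the champion team's exact BiFPN configuration, so the following is only a minimal sketch of that fusion rule, assuming the inputs have already been resized to the same resolution and flattened into plain lists; the function name and example values are hypothetical.

```python
from typing import List, Sequence


def fast_normalized_fusion(
    features: Sequence[Sequence[float]], raw_weights: Sequence[float], eps: float = 1e-4
) -> List[float]:
    """Fuse same-shaped feature maps: O = sum_i w_i * I_i / (eps + sum_j w_j)."""
    # ReLU keeps each learnable weight non-negative, so the result is a convex-like mix.
    weights = [max(0.0, w) for w in raw_weights]
    # eps avoids division by zero when all weights collapse to 0.
    norm = eps + sum(weights)
    return [
        sum(w * feat[i] for w, feat in zip(weights, features)) / norm
        for i in range(len(features[0]))
    ]


# Hypothetical example: a lateral feature and an upsampled coarser feature, flattened.
p4_lateral = [1.0, 2.0, 3.0]
p5_upsampled = [3.0, 4.0, 5.0]
fused = fast_normalized_fusion([p4_lateral, p5_upsampled], [1.0, 1.0], eps=0.0)
```

With equal weights the fusion reduces to a plain average; during training the weights are learned, letting the network decide how much each scale contributes.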

The third-place team used Swin Transformer V2 as the backbone for feature extraction, combined with a Hybrid Task Cascade detection head (HTC+), to detect objects in the fisheye images. Swin Transformer introduces several important visual priors into the Transformer encoder, including hierarchical structure, locality and translation invariance, combining the strengths of both. HTC+ improves information flow by interleaving cascade stages with multi-task branches at each stage, and uses spatial context to further improve accuracy. At inference time, Soft-NMS is used to suppress redundant detection boxes. Finally, weighted boxes fusion (WBF) uses the confidence scores of all detected bounding boxes to build averaged boxes, further improving detection accuracy.
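The two post-processing steps mentioned above can be sketched in plain Python. This is a minimal illustration, not the team's code: it uses the Gaussian variant of Soft-NMS with a hypothetical `sigma=0.5`, and a simplified WBF that clusters boxes by IoU with the running fused box and averages coordinates weighted by confidence (it omits the original WBF's rescaling of confidence by the number of contributing models). Boxes are assumed to be `(x1, y1, x2, y2)` tuples.

```python
import math
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def soft_nms(boxes: Sequence[Box], scores: Sequence[float],
             sigma: float = 0.5, score_thresh: float = 0.001) -> List[Tuple[Box, float]]:
    """Gaussian Soft-NMS: decay the scores of overlapping boxes instead of deleting them."""
    remaining = list(zip(boxes, scores))
    kept = []
    while remaining:
        # Take the currently highest-scoring box.
        best_i = max(range(len(remaining)), key=lambda i: remaining[i][1])
        best_box, best_score = remaining.pop(best_i)
        kept.append((best_box, best_score))
        # Decay every other score by its overlap with the chosen box; drop near-zero ones.
        decayed = []
        for box, score in remaining:
            new_score = score * math.exp(-(iou(best_box, box) ** 2) / sigma)
            if new_score >= score_thresh:
                decayed.append((box, new_score))
        remaining = decayed
    return kept


def weighted_boxes_fusion(boxes: Sequence[Box], scores: Sequence[float],
                          iou_thresh: float = 0.55) -> List[Tuple[Box, float]]:
    """Simplified WBF: cluster boxes, then average coordinates weighted by confidence."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    clusters = []  # each entry: [fused_box, list of (box, score) members]
    for i in order:
        box, score = boxes[i], scores[i]
        for cluster in clusters:
            if iou(cluster[0], box) > iou_thresh:
                cluster[1].append((box, score))
                total = sum(s for _, s in cluster[1])
                # Confidence-weighted average of each coordinate.
                cluster[0] = tuple(sum(b[k] * s for b, s in cluster[1]) / total for k in range(4))
                break
        else:
            clusters.append([box, [(box, score)]])
    # Fused score: mean confidence of the cluster members.
    return [(fused, sum(s for _, s in members) / len(members)) for fused, members in clusters]


# Hypothetical detections: two overlapping boxes and one isolated box.
dets = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60)]
confs = [0.9, 0.8, 0.7]
kept = soft_nms(dets, confs)
fused = weighted_boxes_fusion(dets, confs)
```

Soft-NMS keeps the overlapping box but lowers its score, which helps when true objects really do overlap; WBF instead merges overlapping boxes into one averaged box, which is why it is typically applied when combining predictions from several models.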

The team of doctoral students Zhao Dong, Wang Zining, Zang Qi and Ye Xiulian won first place in the CVPR 2022 Agriculture-Vision CropHarvest Challenge.

Zhao Dong, Wang Zining, Zang Qi, Ye Xiulian

The CropHarvest Challenge asks whether the area represented by a multispectral time-series signal contains crops. Each signal is a 12-month time series with 18 features per month, representing values aggregated from four different remote sensing datasets over 30-day windows from April to March. From the competition data, the team observed that how the signal changes from month to month is a good indicator of whether crops are present: as crops grow, an area containing crops reflects different spectral values in different months. The team therefore used the self-attention mechanism of the Transformer to capture the trends and latent relationships among the monthly spectral signals, and designed a pre-training strategy to further improve the Transformer's performance.
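The idea of letting each month "look at" every other month can be sketched as single-head scaled dot-product self-attention over the 12 monthly feature vectors. This is a minimal illustration under stated assumptions, not the team's model: the projection size `D_MODEL = 8`, the random (untrained) projection weights, the sinusoidal positional encoding and the synthetic input series are all hypothetical, and the team's pre-training strategy is not reproduced.

```python
import math
import random
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product a @ b."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def positional_encoding(num_steps: int, dim: int) -> Matrix:
    """Sinusoidal encoding so attention can distinguish month 1 from month 12."""
    return [
        [math.sin(pos / 10000 ** (2 * (i // 2) / dim)) if i % 2 == 0
         else math.cos(pos / 10000 ** (2 * (i // 2) / dim)) for i in range(dim)]
        for pos in range(num_steps)
    ]


def self_attention(x: Matrix, wq: Matrix, wk: Matrix, wv: Matrix) -> Tuple[Matrix, Matrix]:
    """Single-head scaled dot-product self-attention over the time steps of x."""
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    scale = math.sqrt(len(wq[0]))
    scores = [[sum(a * b for a, b in zip(qi, kj)) / scale for kj in k] for qi in q]
    weights = [softmax(row) for row in scores]  # weights[i][j]: attention of month i to month j
    return matmul(weights, v), weights


def random_matrix(rng: random.Random, rows: int, cols: int) -> Matrix:
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
MONTHS, FEATURES, D_MODEL = 12, 18, 8  # 12 months x 18 features, as in the challenge data
series = [[rng.random() for _ in range(FEATURES)] for _ in range(MONTHS)]  # synthetic stand-in
pe = positional_encoding(MONTHS, FEATURES)
x = [[s + p for s, p in zip(srow, prow)] for srow, prow in zip(series, pe)]
wq, wk, wv = (random_matrix(rng, FEATURES, D_MODEL) for _ in range(3))
out, attn = self_attention(x, wq, wk, wv)
```

In a trained model the projections are learned, and the 12 x 12 attention matrix shows which months the model relies on, for example the contrast between growing-season and off-season months.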

The team of Song Xinkan, Yang Yiyuan, Liu Chang, Qiu Can, Zhang Le and Gao Yingjia took second place in the CVPR 2022 Tiny Actions Challenge [Track 1: Recognition Task].

Song Xinki, Yang Yiyuan, Liu Chang, Qiu Can, Gao Yingjia, Zhang Le

The Tiny Actions Challenge is a video action detection challenge organized by the University of Central Florida, which aims to detect multiple categories of actions in real-world low-resolution videos. The competition uses the TinyVIRAT-v2 video dataset, whose clips are extracted from real-world surveillance footage. It contains 26,355 instances: 16,950 for training, 3,308 for validation and 6,097 for testing. Video resolutions range from 10 × 10 to 128 × 128 pixels, and the average clip length is about 3 seconds. For this micro-action recognition track on the real-world, low-resolution TinyVIRAT-v2 dataset, the team worked to improve video quality. Training was divided into two stages. In the first stage, the team trained 6 models on the dataset: R(2+1)D, SlowFast, CSN, X3D, TimeSformer and Video. To improve model performance, the models were pre-trained on the Kinetics-400 dataset to obtain pre-trained weights. In the second stage, once the models had largely converged, they were fused, and performance was measured with ten-fold cross-validation. Based on the prediction scores, binary classifiers were trained for the categories with severe class imbalance, and the prediction scores were filtered accordingly. The team finally ranked second with a score of 0.8732.

The team of Gao Zihan, Ma Tianzhi and He Wenxin took second place in the CVPR 2022 VizWiz Grand Challenge [Track 1: Visual Question Answering Challenge Task], and the team of Lu Xiaoqiang and Cao Guojin took third place in the same track.
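For the Tiny Actions runner-up team's second stage, the ten-fold cross-validation and model fusion can be sketched as follows. This is a hypothetical illustration: the article does not say how folds were built or how scores were combined, so the shuffled index split and the simple score averaging (late fusion) below are assumptions, and the function names are invented for the example.

```python
import random
from typing import Iterator, List, Sequence, Tuple


def k_fold_indices(n: int, k: int = 10, seed: int = 0) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_indices, val_indices) pairs for k-fold cross-validation."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]  # k near-equal, disjoint folds
    for i in range(k):
        val = sorted(folds[i])
        # Every index outside fold i is used for training in this round.
        train = sorted(j for f, fold in enumerate(folds) if f != i for j in fold)
        yield train, val


def average_fusion(model_scores: Sequence[Sequence[float]]) -> List[float]:
    """Late fusion: average the per-class scores predicted by several models."""
    return [sum(col) / len(model_scores) for col in zip(*model_scores)]


# Hypothetical usage on the 16,950 training instances of TinyVIRAT-v2.
splits = list(k_fold_indices(16950, k=10))
fused_scores = average_fusion([[0.2, 0.8], [0.4, 0.6]])  # two models, two classes
```

Each instance appears in exactly one validation fold, so averaging the ten validation scores gives a less noisy estimate of how a fused model will generalize than a single train/validation split.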

Gao Zihan, Ma Tianzhi, He Wenxin

The main task of the VizWiz VQA 2022 track is to train a model that predicts answers to questions asked by blind people about the photos they took. The runner-up team used VinVL to extract image features and fused the X-VLM, ALBEF, OSCAR and OFA models. In addition, when the fused model's prediction confidence is low and the answer predicted by SA-M4C lies outside the answer list, the fused result is replaced and this generated answer is used as the final answer. Finally, results from multiple versions were used to override answers to questions with lower scores, improving the final accuracy.
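The answer-replacement rule used by the VizWiz runner-up team can be written as a small decision function. This is a minimal sketch: the article gives no confidence threshold, so `confidence_threshold=0.5` is a hypothetical value, and the function and parameter names are invented for illustration.

```python
from typing import Collection


def select_final_answer(
    fused_answer: str,
    fused_confidence: float,
    generated_answer: str,
    answer_list: Collection[str],
    confidence_threshold: float = 0.5,  # hypothetical value; the article gives no threshold
) -> str:
    """Use the generative (SA-M4C) answer only under both conditions described above."""
    low_confidence = fused_confidence < confidence_threshold
    outside_answer_list = generated_answer not in answer_list
    if low_confidence and outside_answer_list:
        return generated_answer
    return fused_answer


# Hypothetical example: the classifier is unsure and the generator proposes an unseen answer.
answer_vocab = {"yes", "no", "blue"}
final = select_final_answer("blue", 0.2, "tomato soup", answer_vocab)
```

The design keeps the classification-style fused model as the default and only trusts the generative model where the fixed answer list is most likely to be missing the correct answer.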

The third-place team proposed an answer-region-guided VQA algorithm based on vision-language pre-training. Unlike traditional VQA algorithms, which treat VQA as a multi-label classification problem, the team uses an autoregressive decoder to generate the final answer. In addition, global image features include many regions unrelated to the text, while extracting object features with a pre-trained detector not only adds extra computational cost but also introduces features unrelated to the text. To address this, the team proposed an answer-region guidance algorithm: first, the question-image-answer input is fed to a referring semantic segmentation model to obtain the precise answer region in the image; then an attention module guides the model to focus on that region, and the answer is produced by the decoder.

Beyond these four challenges, the team's students also achieved strong results in other tracks. Challenge 5: in UG2+ Track 1, Object Detection in Haze, the team of Lu Xiaoqiang and Cao Guojin placed fourth (with certificate). Challenge 6: in Supervised Semantic Segmentation in Adverse Conditions, a student team ranked fifth on the Track 1 (Night) leaderboard, and the team of Gao Zihan, Ma Tianzhi and He Wenxin ranked fourth in Track 2 (Rain). Challenge 7: in Uncertainty-Aware Supervised Semantic Segmentation in Adverse Conditions (Rain), the team of Wang Jiahao, Wang Hao and Dong Jun ranked third. Challenge 8: in Hotel-ID to Combat Human Trafficking 2022 - FGVC9, the team of Wang Jiahao and Wang Hao ranked fourth. Challenge 9: in the 2022 AI City Challenge Track 1, City-Scale Multi-Camera Vehicle Tracking, the team of Zuo Yi, Wang Zitao and Zhang Junpei ranked eighth. Challenge 10: in the ACDC Challenge 2022 Track 1, Normal-to-Adverse Domain Adaptation on Cityscapes → ACDC, the team of He Pei ranked third.

CVPR, short for the IEEE Conference on Computer Vision and Pattern Recognition, is an annual academic conference focused on computer vision and pattern recognition, and is one of the three major computer vision conferences in the world. Academician Jiao Licheng of the School of Artificial Intelligence, Xi'an University of Electronic Science and Technology, has more than 30 years of research experience in remote sensing.
The school's students have repeatedly achieved strong results in professional competitions. Using academic competitions to rapidly build students' research ability and strengthen academic exchange is one of the school's key measures for talent training. "Learning through competition" not only helps students quickly acquire knowledge of the field and boosts their motivation for research, but also trains their organization, coordination and ability to work under pressure. To date, the team has won 12 first places, 16 second places and 9 third places in international competitions held with IGARSS, CVPR, ICCV and ECCV over the past three years.

(Source: Xidian News Network)

- END -

Wuhan Studio Rankings | Fine Examinations Expert Expenses to take 30 points for more than four points to do a good job

MentalityThe quality of the mentality directly determines that your test efficienc...

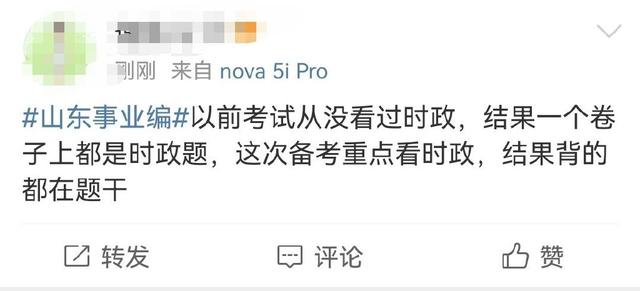

"Difficulties" on Shandong business compilation test questions!Test a sentence, an ice pier, and Su Shi's corn paste

In 2022, the written test of the comprehensive positions of Shandong Provincial Pu...