6 recommended Python frameworks for explainable AI (XAI)

Author: Data School Thu  Time: 2022.08.08

Source: Deephub IMBA

This article is about 1,500 words; the recommended reading time is 5 minutes.

This article introduces 6 Python frameworks for explainability.

As artificial intelligence has developed, people have built increasingly complex and opaque models to solve challenging problems. Such a model is like a black box: it can make decisions by itself, but people do not know the reasons behind them. We build an AI model, feed in data, and get results out, yet we cannot explain why the AI reached that conclusion. We need to understand how the AI arrives at a given conclusion, rather than simply accepting an output that comes with no context or explanation.

Explainability is designed to help people understand:

How does the model learn?

What did it learn?

Why does it make a particular decision for a specific input?

Is the decision reliable?

In this article, I will introduce 6 Python frameworks for explainability.

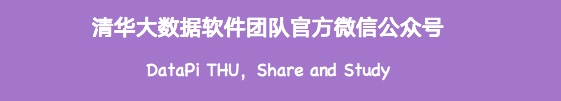

SHAP

SHapley Additive exPlanations (SHAP) is a game-theoretic method for explaining the output of any machine learning model. It uses the classic Shapley values from game theory and their related extensions to connect optimal credit allocation with local explanations (see the paper for details and references).

The contribution of each feature to the model's prediction is explained by its Shapley value. The SHAP algorithm by Lundberg and Lee was first published in 2017 and has since been widely adopted by the community in many different fields.

Install the shap library with pip or conda.

    # Install with pip
    pip install shap

    # Install with conda
    conda install -c conda-forge shap

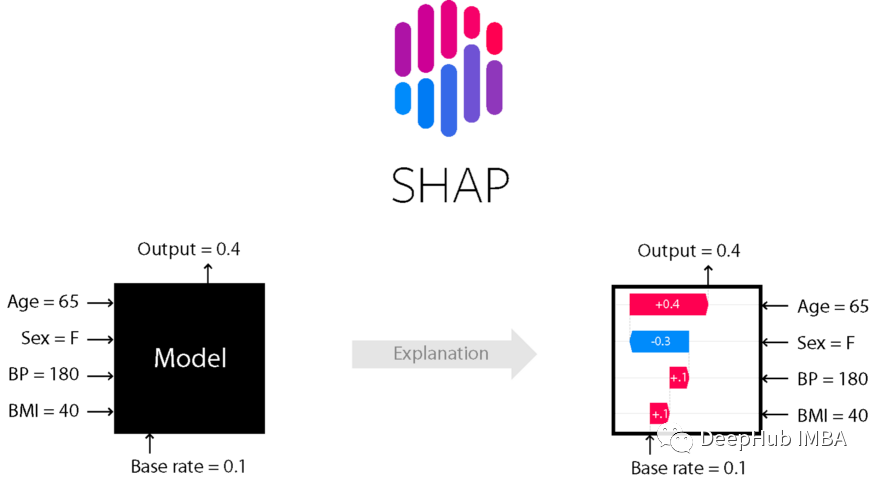

Use the shap library to build a waterfall map

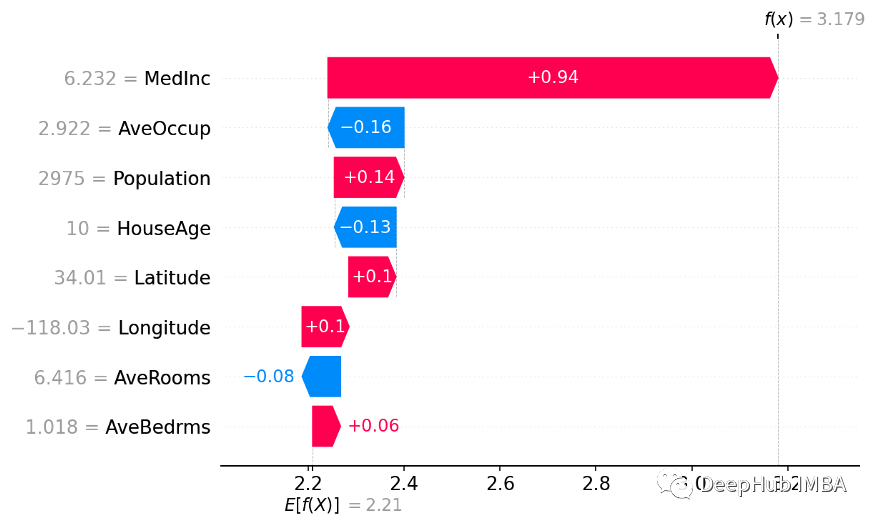

Use the shap library to build the Beeswarm diagram

Construction part of the dependencies using the shap library
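To make the idea of Shapley values concrete, here is a minimal sketch that computes exact Shapley values for a small toy model by enumerating every feature ordering. It is not how the shap library is implemented: shap relies on much faster approximations and model-specific algorithms, and it typically averages over a background dataset rather than using a single baseline. The names `shapley_values` and `toy_model`, and the all-zeros baseline, are assumptions made for illustration only.

```python
from itertools import permutations


def shapley_values(predict, instance, baseline):
    """Exact Shapley values: average each feature's marginal contribution
    over all possible orderings in which features can be 'switched on'."""
    n = len(instance)
    totals = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)            # start with every feature at its baseline
        previous = predict(current)
        for i in order:
            current[i] = instance[i]        # reveal the real value of feature i
            value = predict(current)
            totals[i] += value - previous   # marginal contribution of feature i
            previous = value
    return [t / len(orderings) for t in totals]


# Hypothetical toy model (an assumption for this sketch): feature 0 acts alone,
# features 1 and 2 only matter through their interaction.
def toy_model(x):
    return 2.0 * x[0] + x[1] * x[2] + 1.0


instance = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]   # assumed reference point, not a shap default

phi = shapley_values(toy_model, instance, baseline)
print(phi)
# The contributions add up to the gap between the prediction and the baseline output.
print(sum(phi), toy_model(instance) - toy_model(baseline))
```

The interaction term is split equally between features 1 and 2, and the contributions sum to the difference between the prediction and the baseline output. This additive property is what a waterfall plot visualizes. Enumerating orderings grows factorially with the number of features, so this approach is only practical for tiny examples.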

Lime

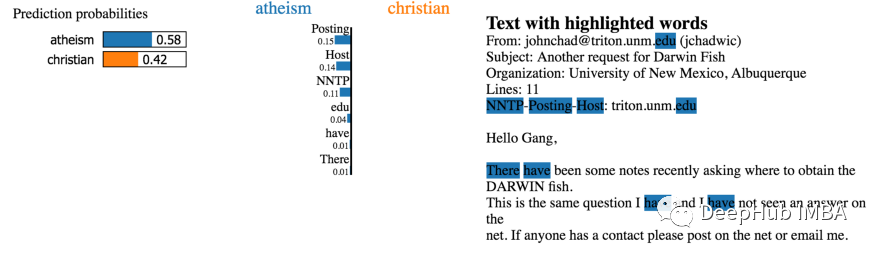

One of the earliest methods in explanatory areas is Lime. It can help explain what machine learning models are learning and why they predict in some way. Lime currently supports the interpretation of data, text classifiers and image classifiers.

Knowing why the model is predicted in this way is essential to adjust the algorithm. With the explanation of Lime, you can understand why the model is running in this way. If the model does not run according to the plan, it is likely to make an error in the data preparation stage.

Use PIP installation:

PIP Install Lime

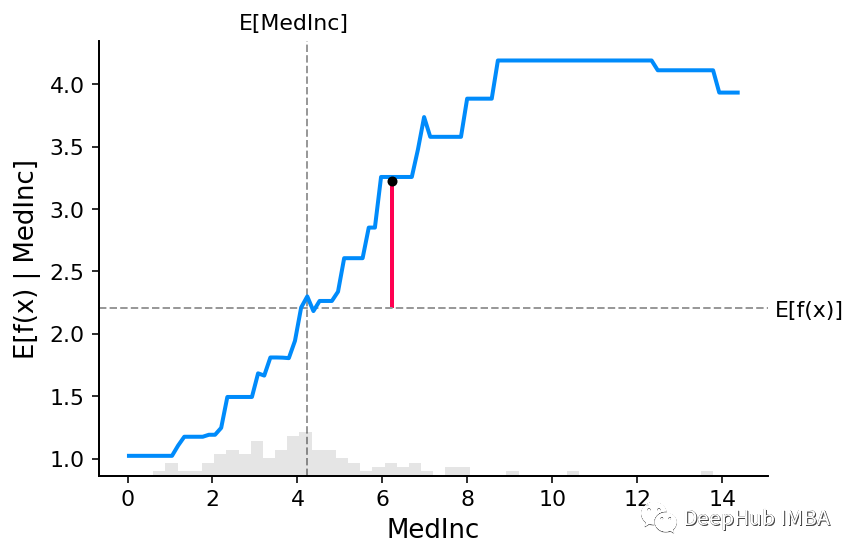

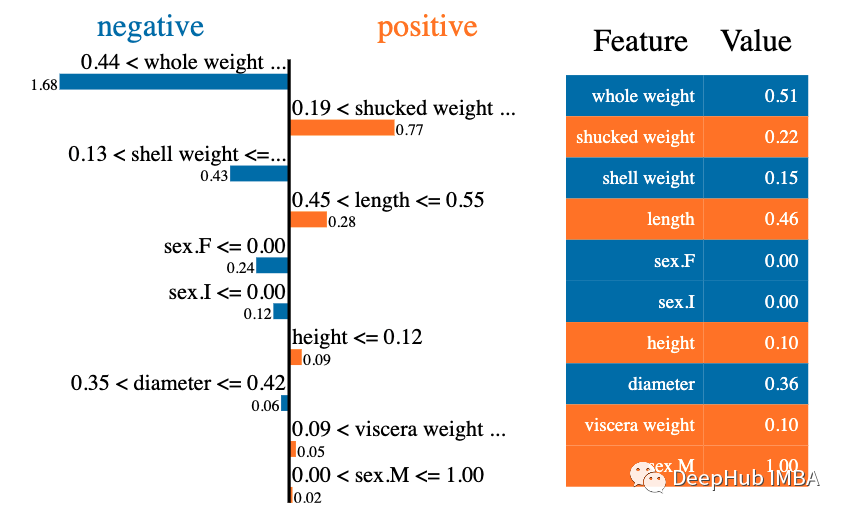

Local interpretation of Lime

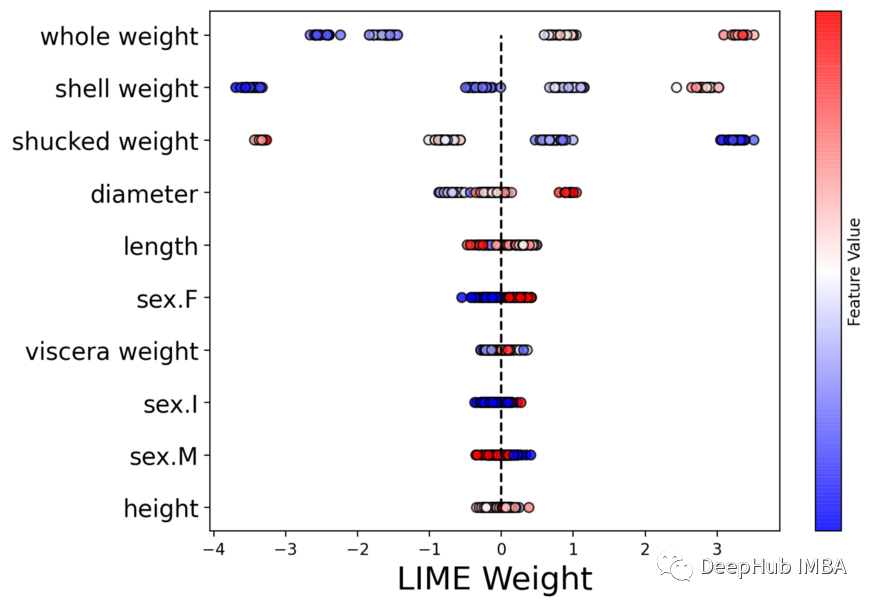

Lime built beeswarm diagram
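The core idea behind a local explanation can be sketched without the library: perturb the input around one instance, weight each perturbed sample by how close it is to that instance, and fit a weighted linear model whose coefficients serve as the explanation. The sketch below is a simplified illustration of that idea, not the lime package's implementation. The function names, the Gaussian perturbations, the kernel width, and the toy `black_box` model are all assumptions chosen for the example.

```python
import math
import random


def black_box(x):
    """Hypothetical nonlinear model standing in for any opaque predictor."""
    return x[0] ** 2 + 3.0 * x[1]


def solve_linear_system(a, b):
    """Solve a small dense system a @ w = b by Gauss-Jordan elimination
    with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def local_surrogate(predict, instance, n_samples=500, scale=0.1,
                    kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear model around `instance`.

    Returns (intercept, slopes); the slopes act as local feature effects.
    """
    rng = random.Random(seed)
    dim = len(instance)
    xtwx = [[0.0] * (dim + 1) for _ in range(dim + 1)]
    xtwy = [0.0] * (dim + 1)
    for _ in range(n_samples):
        delta = [rng.gauss(0.0, scale) for _ in range(dim)]   # random perturbation
        sample = [x + d for x, d in zip(instance, delta)]
        dist2 = sum(d * d for d in delta)
        weight = math.exp(-dist2 / kernel_width ** 2)          # closer samples count more
        row = [1.0] + delta                                    # intercept + offsets
        y = predict(sample)
        for i in range(dim + 1):
            xtwy[i] += weight * row[i] * y
            for j in range(dim + 1):
                xtwx[i][j] += weight * row[i] * row[j]
    coefs = solve_linear_system(xtwx, xtwy)                    # weighted least squares
    return coefs[0], coefs[1:]


intercept, slopes = local_surrogate(black_box, [1.0, 2.0])
print(intercept, slopes)
```

Near the point (1, 2), the toy model behaves roughly like a linear function with slope about 2 for the first feature and about 3 for the second, so the fitted slopes should land close to those values. The explanation is only valid in the neighborhood of the chosen instance; a different instance would yield different slopes.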

Shapash

"Shapash is a Python library that aims to make machine learning interpretable and understandable by everyone. It provides several types of visualization that display explicit labels that everyone can understand. Data scientists can understand their models more easily and share their results. End users can understand the decision proposed by a model using a summary of the most influential criteria."

To convey the stories, insights, and model findings contained in data, interactive and attractive charts are essential. The best way for business users and data scientists/analysts to present and interact with AI/ML results is to visualize them and put them on the web. The Shapash library can generate an interactive dashboard that brings together many visualizations related to SHAP/LIME explainability. It can use SHAP or LIME as its backend; that is, it only provides better-looking charts.

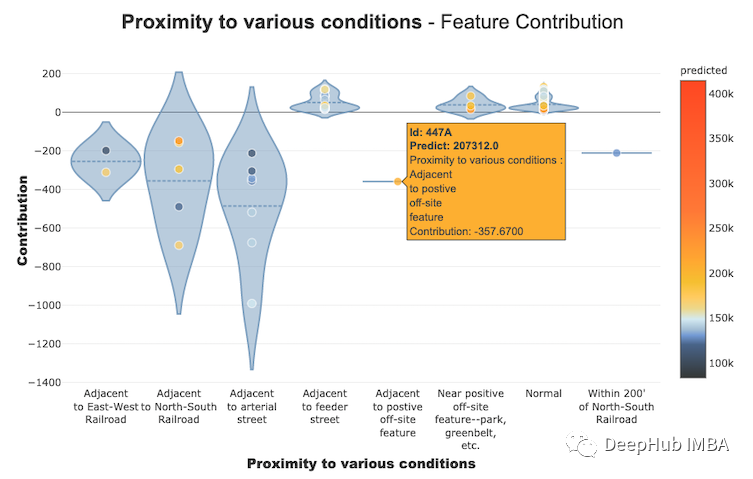

Use Shapash to build a characteristic contribution diagram

Interactive panel created by the Shapash Library

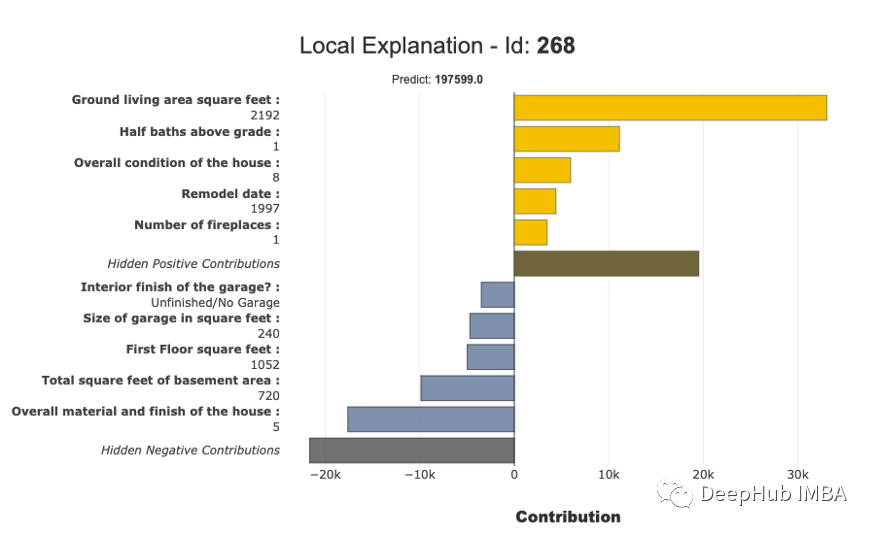

Local interpretation of Shapash

InterpretML

InterpretML is an open-source Python package that provides machine learning interpretability algorithms to researchers. InterpretML supports training interpretable models (glassbox) as well as explaining existing ML pipelines (blackbox).

InterpretML exposes two types of interpretability: glassbox models, which are machine learning models designed for interpretability (such as linear models, rule lists, and generalized additive models), and blackbox explainability techniques, which are used to explain existing systems (such as partial dependence and LIME). It uses a unified API that wraps multiple methods and has a built-in, extensible visualization platform, which lets researchers easily compare interpretability algorithms. InterpretML also includes the first implementation of the Explainable Boosting Machine, a powerful, interpretable glassbox model that can be as accurate as many blackbox models.

A local explanation plot built with InterpretML

A global explanation plot built with InterpretML
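Partial dependence, one of the blackbox techniques mentioned above, is simple enough to sketch by hand: fix one feature at a chosen value for every row of a dataset, average the model's predictions, and repeat across a grid of values. The snippet below is a minimal illustration under assumed names (`partial_dependence`, `black_box`, and the three-row toy dataset are all hypothetical); it does not use or reproduce InterpretML's API.

```python
def partial_dependence(predict, rows, feature, grid):
    """Average prediction over the dataset when `feature` is forced
    to each value in `grid`, leaving the other features untouched."""
    curve = []
    for value in grid:
        total = 0.0
        for row in rows:
            modified = list(row)        # copy so the original data is not changed
            modified[feature] = value   # overwrite the feature of interest
            total += predict(modified)
        curve.append(total / len(rows))
    return curve


# Hypothetical black-box model (an assumption for this sketch).
def black_box(x):
    return 4.0 * x[0] + x[1] ** 2


# Tiny made-up dataset with two features per row.
rows = [[0.0, 1.0], [1.0, 2.0], [2.0, 3.0]]

pd_curve = partial_dependence(black_box, rows, feature=0, grid=[0.0, 1.0, 2.0])
print(pd_curve)
```

Because the toy model is linear in the first feature, the resulting curve rises by about 4 for every unit step along the grid, which is the global effect a partial dependence plot is meant to reveal.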

ELI5

ELI5 is a Python library that helps debug machine learning classifiers and explain their predictions. It currently supports the following machine learning frameworks:

scikit-learn

XGBoost, LightGBM, CatBoost

Keras

ELI5 offers two main ways to look at a classification or regression model:

Inspect the model parameters and explain how the model works globally;

Inspect an individual prediction of the model and explain why the model makes that decision.
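For a linear model, the two views described above can be sketched in a few lines: the global view ranks features by the size of their weights, and the local view breaks one prediction into per-feature contributions (weight times feature value) plus a bias term. This is an illustrative sketch only and does not call the ELI5 library; the function names, feature names, weights, and the `<BIAS>` label are assumptions made for the example.

```python
def global_weights(weights, feature_names):
    """Global view: rank features by the absolute size of their weight."""
    pairs = zip(feature_names, weights)
    return sorted(pairs, key=lambda pair: abs(pair[1]), reverse=True)


def explain_prediction(weights, bias, feature_names, x):
    """Local view: split one linear prediction into per-feature contributions."""
    contributions = {
        name: w * value for name, w, value in zip(feature_names, weights, x)
    }
    contributions["<BIAS>"] = bias          # intercept shown as its own entry
    score = sum(contributions.values())     # equals the model's raw output
    return score, contributions


# Hypothetical linear model (all values are made up for illustration).
feature_names = ["age", "income", "tenure"]
weights = [0.5, -2.0, 1.0]
bias = 0.25

ranking = global_weights(weights, feature_names)
score, contributions = explain_prediction(
    weights, bias, feature_names, [2.0, 0.5, 3.0]
)

print(ranking)
print(score, contributions)
```

Note that a feature with a large global weight can still contribute little to a particular prediction if its value for that input is small, which is why the two views complement each other.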

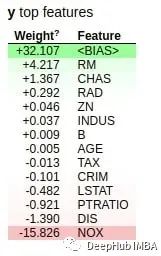

Global weights generated with the ELI5 library

Local weights generated with the ELI5 library

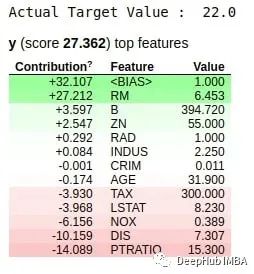

OmniXAI

OmniXAI (short for Omni eXplainable AI) is a Python library recently developed and open-sourced by Salesforce. It provides a full range of explainable AI and interpretable machine learning capabilities to address the many questions that require judgment when machine learning models are used in practice. For data scientists and ML researchers who need to explain various types of data, models, and explanation techniques at different stages of the ML process, OmniXAI aims to be a one-stop comprehensive library that makes explainable AI easy.

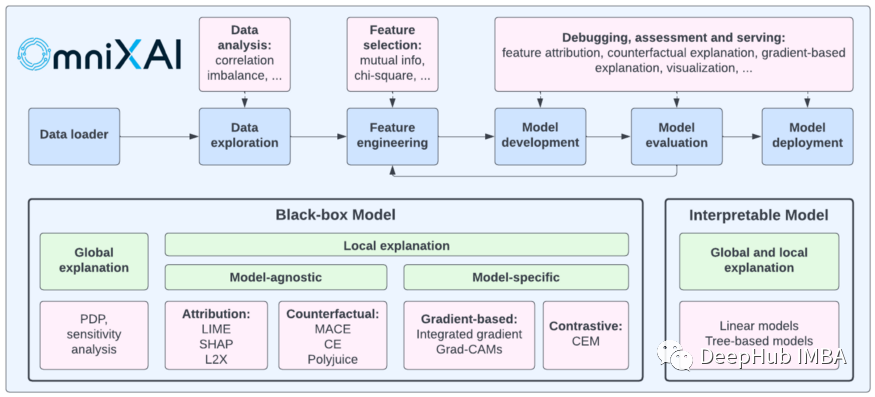

The following is a comparison between OmniXAI and other similar libraries:

Finally, here are the official addresses of these 6 frameworks:

https://shap.readthedocs.io/en/latest/index.html

https://github.com/marcotcr/lime

https://shapash.readthedocs.io/en/latest/

https://interpret.ml/

https://eli5.readthedocs.io/

https://github.com/salesforce/omnixai

Author: MOEZ ALI

Edit: Huang Jiyan

- END -
